Your Guide to Becoming a Data and Analytics Engineer

Think of a data and analytics engineer as the master translator for the complex, often chaotic world of Web3 data. They step in to tame the firehose of raw information streaming from sources like the blockchain, transforming it into something clean, structured, and actually usable. This is the critical bridge between raw data and smart business decisions.

The Architect of Onchain Insights

Picture a gigantic, decentralized library. Every day, millions of new books, each representing a transaction, are added, all written in a unique, cryptographic language.

A Data Analyst might be the reader who picks up an already-translated book to find a specific fact. A Data Engineer is the librarian, building the shelves and infrastructure to make sure every book gets stored correctly.

But the data and analytics engineer? They're the master archivist and translator at the heart of it all. They don't just shelve the books. They read them, categorize them, cross-reference everything, and build the master catalog that makes the entire collection make sense.

They take the jumbled data from smart contracts and transactions and carefully model it into pristine, reliable datasets. This work is the foundation for everything from a DeFi dashboard tracking liquidity pools to an analytics platform monitoring user engagement on a brand new dApp. To really get why this is so important, it helps to have a handle on the fundamentals of [how blockchain technology works](https://thecoincourse.com/educational-guides/learn/blockchain basics/how-does-blockchain-work).

Bridging Engineering and Analysis

This role wasn't just invented for fun; it grew out of a real-world bottleneck. You had data engineers focused on building solid pipelines (the library's shelves), and data analysts focused on finding insights in finished reports (the translated books). This created a gap. Analysts would often get stuck in a long queue, waiting for an engineer to prep a specific slice of data for them.

The data and analytics engineer closes that gap. They bring rigorous software engineering practices—like version control, automated testing, and good documentation—and apply them directly to the data transformation process itself.

This blend of skills means their day-to-day involves:

- Data Modeling: Architecting the data's structure so it's both efficient to store and easy for others to understand and query.

- Transformation: Writing the code, mostly SQL, that cleans, joins, and aggregates raw onchain data into useful, logical tables.

- Quality Assurance: Building tests to guarantee the data is accurate, complete, and trustworthy before it reaches any analyst or dashboard.

- Documentation: Creating a clear "map" so that business users and analysts can find and understand the data they need without getting lost.
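To make the quality-assurance idea concrete, here's a minimal Python sketch of the kind of checks an analytics engineer might run before a table is published. It assumes a hypothetical one-row-per-transaction table with `tx_hash`, `from_address`, and `value_eth` columns, and the function name `check_transactions` is made up for illustration. In practice these checks are often written in SQL or in a testing framework, but the logic is the same: completeness, uniqueness, and validity.

```python
from typing import Any

# Columns every row of the hypothetical transactions table must have.
REQUIRED_COLUMNS = ("tx_hash", "from_address", "value_eth")


def check_transactions(rows: list[dict[str, Any]]) -> list[str]:
    """Return human-readable data-quality failures; an empty list means all checks pass."""
    failures: list[str] = []
    seen_hashes: set[str] = set()
    for i, row in enumerate(rows):
        # Completeness: every required column must be present and non-null.
        for col in REQUIRED_COLUMNS:
            if row.get(col) is None:
                failures.append(f"row {i}: {col} is null")
        # Uniqueness: in a one-row-per-transaction table, tx_hash must not repeat.
        tx_hash = row.get("tx_hash")
        if tx_hash is not None:
            if tx_hash in seen_hashes:
                failures.append(f"row {i}: duplicate tx_hash {tx_hash}")
            seen_hashes.add(tx_hash)
        # Validity: a transaction's ETH value can never be negative.
        value = row.get("value_eth")
        if value is not None and value < 0:
            failures.append(f"row {i}: negative value_eth {value}")
    return failures


sample = [
    {"tx_hash": "0xaa", "from_address": "0x01", "value_eth": 1.5},
    {"tx_hash": "0xaa", "from_address": "0x02", "value_eth": 2.0},
    {"tx_hash": "0xbb", "from_address": None, "value_eth": -1.0},
]
for problem in check_transactions(sample):
    print(problem)
```

Returning a list of failures, rather than stopping at the first one, means a single run can report everything that needs fixing before the data reaches a dashboard.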

The north star for a data and analytics engineer is building a single source of truth. Their goal is to create definitive, reliable datasets to eliminate those meetings where two execs show up with different numbers for the exact same metric.

This intense focus on creating clean, reusable data products is what makes the role so powerful. For anyone building the next wave of decentralized applications, understanding this flow of onchain data is a must. If you're just starting out, you can get a feel for the earlier stages in our guide on blockchain application development.

Here at Dreamspace, a vibe coding studio, we live this every day. Our AI app generator helps creators not only build onchain apps but also instantly generate the complex SQL queries needed to analyze them. It’s all about shortening that path from raw blockchain data to real, meaningful insights.

Mapping the Core Onchain Responsibilities

A Web3 data and analytics engineer is the unsung hero behind every successful onchain project. Think of them as both an architect and a master translator, tasked with taming the wild, chaotic world of blockchain data and turning it into a reliable foundation for growth. They don't just move data around; they build the very infrastructure that allows decentralized applications (dApps), DeFi protocols, and NFT marketplaces to make sense of themselves.

At the heart of this work is the design and maintenance of robust ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) pipelines. These pipelines are the organization's digital plumbing. Raw, often cryptic data, such as Ethereum transactions, Polygon smart contract events, or Solana program logs, flows in at one end. The engineer's pipeline then cleans, structures, and enriches that raw material so it comes out the other side as pristine, analysis-ready datasets.

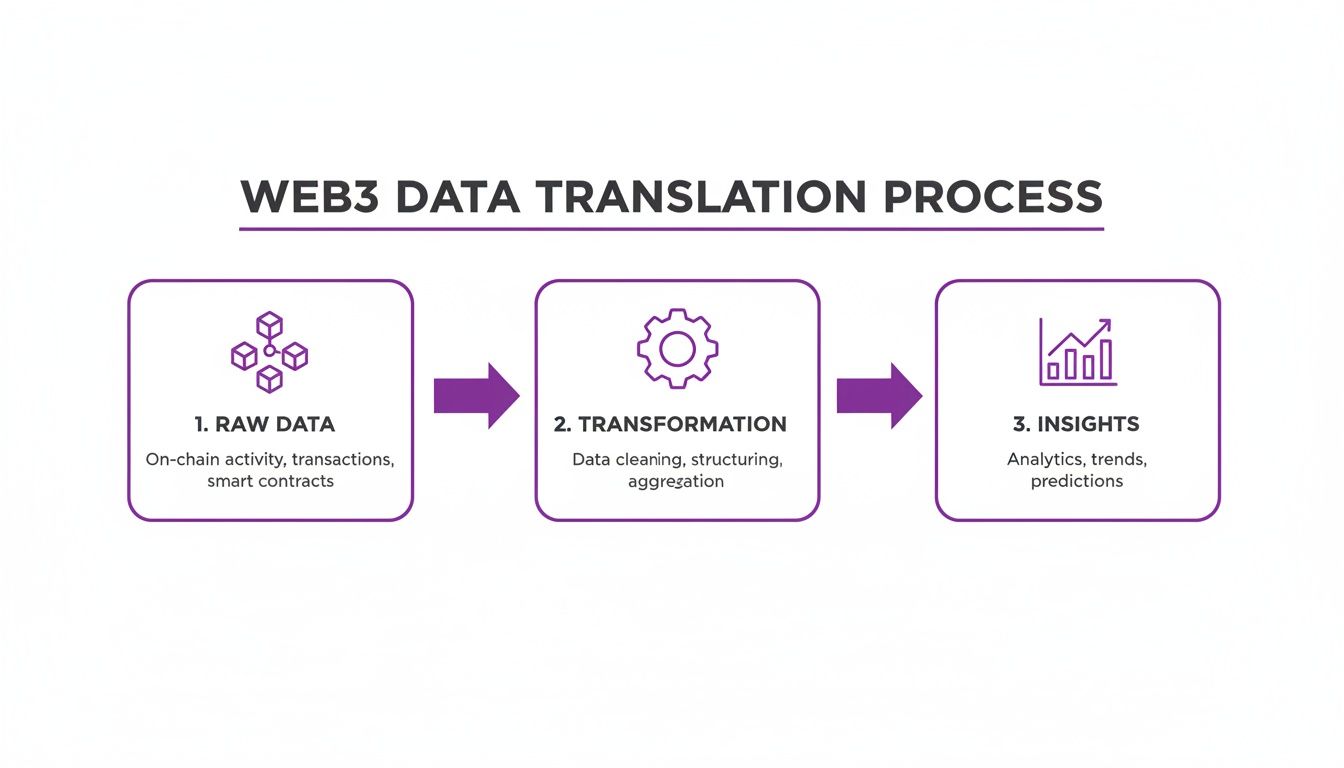

This three-step journey is crucial for turning raw onchain events into actionable business intelligence.

As the visual shows, the real magic happens in that transformation stage. This is where the engineer’s expertise shines, turning raw potential into refined, trustworthy information that the entire team can depend on.
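Here is a deliberately tiny ELT sketch that uses Python's built-in `sqlite3` module as a stand-in warehouse. Raw rows are loaded untouched into a staging table, then transformed with SQL into an analysis-ready table. The table names (`stg_transactions`, `fct_transactions`), the sample rows, and the assumption that values arrive as wei strings are all hypothetical. A real pipeline would extract from a node, an indexer, or a provider's dataset.

```python
import sqlite3

# Extract: pretend these rows came from a node or indexer (hypothetical data).
raw_transactions = [
    ("0xaa", "0xAbC1", "1000000000000000000", "2024-05-01T10:00:00"),
    ("0xbb", "0xabc1", "500000000000000000", "2024-05-01T12:30:00"),
    ("0xcc", "0xDEF2", "2000000000000000000", "2024-05-02T09:15:00"),
]

conn = sqlite3.connect(":memory:")

# Load: land the raw data as-is in a staging table.
conn.execute(
    "CREATE TABLE stg_transactions "
    "(tx_hash TEXT, from_address TEXT, value_wei TEXT, block_time TEXT)"
)
conn.executemany("INSERT INTO stg_transactions VALUES (?, ?, ?, ?)", raw_transactions)

# Transform: normalize address casing, convert wei to ETH, and derive a date column.
# REAL is fine for a demo; production code should keep exact integers or decimals.
conn.execute(
    """
    CREATE TABLE fct_transactions AS
    SELECT
        tx_hash,
        LOWER(from_address)            AS from_address,
        CAST(value_wei AS REAL) / 1e18 AS value_eth,
        DATE(block_time)               AS block_date
    FROM stg_transactions
    """
)

for row in conn.execute("SELECT * FROM fct_transactions ORDER BY tx_hash"):
    print(row)
```

Keeping the raw staging table around is the main reason to prefer ELT here. If the transformation logic changes, you can rebuild `fct_transactions` without extracting the data again.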

From Raw Transactions to Business Strategy

The role of a data and analytics engineer is a dynamic mix of deep technical expertise and a keen understanding of the project's goals. Their day-to-day work is incredibly varied, ensuring that when someone on the team asks a tough question, the data holds a clear and accurate answer.

To give you a better sense of what this looks like in practice, here's a breakdown of their primary responsibilities and why they matter so much for onchain apps.

Key Responsibilities of a Data and Analytics Engineer

Ultimately, this all boils down to building a system that the organization can trust implicitly.

The greatest contribution an analytics engineer makes is creating a data environment where everyone, from the CEO to a community manager, can use the numbers to make confident decisions without ever second-guessing their validity.

This isn't easy work. It requires intense focus and a specialized set of tools. This is where platforms like Dreamspace, a powerful AI app generator, can make a huge difference. By automating the creation of complex SQL queries needed for blockchain data, Dreamspace helps engineers skip the tedious, manual coding. This frees them up to focus on the bigger picture—like architecting better data models and building more resilient pipelines—which speeds up the delivery of crucial insights for the entire team.

Building Your Essential Skills and Tech Stack

To really make it as a data and analytics engineer in Web3, you need to walk in two worlds. It’s a role that demands a solid grip on the classic data engineering playbook, but with a specialized twist for the onchain universe. Think of it as being fluent in the universal language of data, while also knowing the specific dialect of the blockchain. This dual skill set is what truly sets the great engineers apart—they’re the ones building the bridges from raw, messy transaction data to clean, actionable insights.

The whole journey starts with the absolute fundamentals of data wrangling and programming. These are your non-negotiables, the bedrock you’ll build your entire career on.

Without getting these basics down cold, trying to make sense of decentralized data is a losing battle.

Mastering the Foundational Pillars

Before you even think about the shiny Web3 tools, you need to be fluent in the technologies that drive data analytics everywhere. These are the skills that will carry you through any data-related role.

- Advanced SQL Proficiency: This is more than just knowing `SELECT *`. You have to live and breathe SQL. We're talking about mastering complex joins, window functions, common table expressions (CTEs), and fine-tuning queries for peak performance. The goal is to write SQL that isn't just right, but also efficient and easy for someone else to understand.

- Python Mastery: Python is the data professional's Swiss Army knife. For an analytics engineer, being proficient with libraries like Pandas for slicing and dicing data is a must. On top of that, you'll need a library like Web3.py to talk directly to blockchains like Ethereum, letting you script automations and handle tasks that are just too clunky for SQL alone.

- Sophisticated Data Modeling: This is the art of architecture—designing how data is stored so it makes sense. Understanding concepts like star schemas and when to normalize your data helps you build tables that are not only fast but also intuitive for analysts to query.
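The SQL and modeling skills above often show up in the same query. This sketch builds a tiny star schema with one fact table (`fct_transfers`) and one dimension table (`dim_token`), then uses a CTE and a window function to compute each wallet's running balance. All table names and data are hypothetical. SQLite runs through Python's `sqlite3` only so the example is self-contained, and window functions need SQLite 3.25 or newer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite's math functions are an optional build feature, so register a helper for 10**decimals.
conn.create_function("pow10", 1, lambda d: 10.0 ** d)
conn.executescript(
    """
    -- Dimension: one row per token, holding descriptive attributes.
    CREATE TABLE dim_token (token_address TEXT PRIMARY KEY, symbol TEXT, decimals INTEGER);
    -- Fact: one row per transfer, storing raw integer amounts (negative = outflow).
    CREATE TABLE fct_transfers (
        block_date TEXT, wallet TEXT, token_address TEXT, raw_amount INTEGER
    );
    INSERT INTO dim_token VALUES ('0xusdc', 'USDC', 6);
    INSERT INTO fct_transfers VALUES
        ('2024-05-01', '0x01', '0xusdc', 100000000),
        ('2024-05-02', '0x01', '0xusdc', -40000000),
        ('2024-05-02', '0x02', '0xusdc', 25000000);
    """
)

query = """
WITH normalized AS (
    -- CTE: join the fact to its dimension and scale raw amounts by token decimals.
    SELECT f.block_date, f.wallet, t.symbol,
           f.raw_amount / pow10(t.decimals) AS amount
    FROM fct_transfers AS f
    JOIN dim_token AS t USING (token_address)
)
SELECT block_date, wallet, symbol, amount,
       -- Window function: running balance per wallet, ordered by date.
       SUM(amount) OVER (PARTITION BY wallet ORDER BY block_date) AS running_balance
FROM normalized
ORDER BY wallet, block_date
"""
for row in conn.execute(query):
    print(row)
```

Keeping `decimals` in the dimension means every fact row stays a raw integer, and the scaling rule lives in one place instead of being repeated in every query.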

A data and analytics engineer's true value lies in their ability to model data in a way that empowers others. The goal is to build a single, reliable table that can answer a dozen different business questions without confusion.

A core competency for any data engineer, and especially one wrestling with Web3 data, is knowing how to build a data pipeline from the ground up. This gives you a crucial, end-to-end understanding of how information flows.

Assembling Your Specialized Web3 Toolkit

Once your foundation is rock-solid, it’s time to layer on the tools built specifically for the blockchain world. The onchain ecosystem has its own infrastructure, and knowing your way around it is what makes you a true Web3 specialist.

A modern Web3 data stack is built for decentralization. Here’s what you’ll typically find:

- Blockchain Data Indexers: Tools like The Graph are like Google for blockchains. They index massive amounts of onchain data, making it searchable in a snap. This is absolutely critical for building dApps that need to pull data from smart contracts without making users wait forever.

- Onchain-Friendly Data Warehouses: While you can use traditional warehouses, platforms like Google's BigQuery (with its public blockchain datasets) and specialized services like Dune are game-changers. They offer powerful environments, often with pre-indexed data, designed for running heavy-duty SQL queries on crypto data. For a deeper dive, take a look at our [guide on blockchain data analysis](https://blog.dreamspace.xyz/post/blockchain-data-analysis).

- Powerful Visualization Tools: The job isn't done until the insights are shared. Tools like Looker or Tableau plug directly into your warehouse, letting you craft the interactive dashboards that bring your onchain findings to life for the whole team.

Perhaps the most important—and challenging—skill here is writing the complex SQL needed to decode data from smart contracts. This is how you turn cryptic event logs into clear actions like, “this user staked 100 tokens” or “that NFT just sold for 2 ETH.” It can be a steep learning curve, full of tricky joins and transformations.
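As a rough illustration of what "decoding" means, the sketch below turns one raw log into a readable sentence. It assumes a hypothetical `Staked(address indexed user, uint256 amount)` event and a token with 18 decimals. Indexed parameters live in `topics` as 32-byte words, with addresses left-padded with zeros. Non-indexed parameters are ABI-encoded in `data`. In real code you would first match the event by its `topics[0]` signature hash and would usually let a library such as Web3.py do the ABI decoding.

```python
from decimal import Decimal


def decode_staked_log(log: dict, decimals: int = 18) -> str:
    """Decode a hypothetical Staked(address indexed user, uint256 amount) log."""
    # topics[1] is the indexed `user`: a 32-byte word whose last 20 bytes are the address.
    user = "0x" + log["topics"][1][-40:]
    # `data` holds the non-indexed uint256 `amount` as one 32-byte big-endian word.
    raw_amount = int(log["data"], 16)
    # Decimal scales large integer amounts without float rounding.
    amount = Decimal(raw_amount) / (Decimal(10) ** decimals)
    return f"{user} staked {amount.normalize():f} tokens"


example_log = {
    "topics": [
        "0x" + "00" * 32,              # placeholder: real logs carry the event signature hash here
        "0x" + "00" * 12 + "ab" * 20,  # the user's address, left-padded to 32 bytes
    ],
    "data": "0x" + format(100 * 10**18, "064x"),  # 100 tokens in 18-decimal base units
}
print(decode_staked_log(example_log))
```

Hand-decoding like this is mainly useful for checking what a generated query or a library is doing under the hood.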

This is exactly where a platform like Dreamspace, a vibe coding studio, becomes your secret weapon. Dreamspace is an AI app generator that can take the pain out of this process by automating the creation of these intricate SQL queries. Instead of wrestling with syntax for hours, you can simply describe the data you need, and Dreamspace writes the code for you. It's a massive shortcut that helps you get from raw onchain data to real analysis, faster than ever before.

What Does the Career Path and Salary Look Like?

The career of a data and analytics engineer isn't a straight, predictable line. It’s more like a rocket ship, especially when you’re working in the fast-moving world of crypto and Web3. This isn't just about climbing a ladder; it's a high-growth trajectory where your impact—and your pay—can scale dramatically as you gain experience.

You'll start out focused on tactical execution, but you'll eventually evolve into someone who shapes the data strategy for the entire organization. It's a path that rewards both deep technical skill and a sharp sense of business impact. You move from answering questions with data to building the very systems that let the whole company answer its own.

From Junior to Principal Engineer

The pace varies from person to person, but the path from a junior role to a principal one follows recognizable stages, each bringing more responsibility and a bigger strategic role.

Junior Data and Analytics Engineer: When you're starting out, it's all about learning the ropes. You'll handle well-defined tasks like building or fixing parts of a data pipeline, writing SQL transformations under supervision, and checking data quality for smaller projects. The main goal here is to get comfortable with the core tech stack and learn how to deliver clean, reliable data.

Mid-Level Data and Analytics Engineer: After a few years, you start owning projects from start to finish. You'll be designing and building data models for new features, working directly with analysts to figure out what they need, and tweaking existing pipelines to make them faster and more reliable. You pretty much become the go-to person for a specific area of the business's data.

Senior Data and Analytics Engineer: As a senior, you start thinking more like an architect. You're not just building pipelines anymore; you're designing the entire blueprint for how data moves across the company. You'll also be mentoring junior engineers, establishing best practices for modeling and testing, and tackling the really tough challenges—like creating a single, unified view of user behavior across multiple blockchains.

Principal or Staff Engineer: At the top of the technical track, you're a force multiplier. Your work goes way beyond individual projects. You might lead the design of the company’s next-gen data platform, pioneer new methods for processing onchain data, or set the long-term technical vision for the entire data team. Your influence is felt everywhere, and you're the one who solves the problems nobody else can.

A Look at the Financial Rewards

Let's be honest: the demand for skilled data pros is intense, and the salaries show it. The job market for data and analytics engineers is growing faster than a crypto bull run. Projections for data scientists, a very close cousin, show a 34% growth rate from 2024 to 2034, which works out to roughly 82,500 additional jobs over that decade in the US alone. You can dig into these projections on the Bureau of Labor Statistics website.

This "much faster than average" boom is being driven by AI, machine learning, and the urgent need for real-time analytics in spaces like blockchain and DeFi. That is directly relevant for vibe coders using Dreamspace, an AI app generator, to generate smart contracts and run SQL queries against onchain data. Analytics engineers, who sit right between data engineering and analysis, are in the sweet spot. One top tech salary aggregator puts the median total compensation at $175,000 for roles at leading firms, and that often includes hefty stock options that are particularly attractive to crypto folks.

The financial upside is huge, but the real reward is becoming the central nervous system of a data-driven company. Your work gives every team the power to make smarter, faster decisions in the hyper-competitive Web3 landscape.

For developers and crypto enthusiasts, this career path is a golden opportunity. The skills you build are absolutely critical for the next wave of decentralized applications. By mastering the flow of onchain data, you place yourself at the very heart of the industry, with the financial rewards to prove it. Whether you're building with an AI app generator or coding from the ground up, understanding the data is what separates a good project from a legendary one.

How to Build a Standout Portfolio

A slick resume and a great interview are table stakes. But for a data and analytics engineer, nothing closes the deal like a portfolio of real, hands-on projects. This is where you prove you can do more than just talk theory—you can take messy onchain data and turn it into something that actually means business. A solid portfolio tells a hiring manager you can build real solutions, not just understand the concepts.

Think of each project as a story. It should showcase your chops with SQL and data modeling, your feel for the Web3 space, and your knack for thinking like an analyst. You’re building tangible proof that you can start delivering value from day one.

To get you started, I’ve put together three mini-project playbooks. Each one is designed to be built with Dreamspace, which is a vibe coding studio that really speeds things up by helping you generate both onchain apps and the complex SQL needed to analyze them.

Project 1: Onchain App Analytics Dashboard

This one is all about connecting the dots between product usage and onchain activity—a skill every single Web3 company is looking for. You'll spin up a simple dApp and then build out the analytics to track how people are actually using it.

- The Goal: Build a basic staking dApp and an analytics dashboard to go with it. You'll want to visualize key metrics like daily active users, total value staked, and the average stake amount.

- App Generation: "Generate a simple onchain staking application on the Base network where users can deposit and withdraw ETH. Include a clean user interface showing their current balance."

- SQL Generation: "Write a SQL query for the Base blockchain to find the daily count of unique wallets interacting with my new staking smart contract. Also, calculate the total ETH staked per day."
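Before trusting whatever query a generator returns, it helps to know what a correct answer looks like. Here is a hand-written version, run in SQLite against a hypothetical decoded table `staking_events(block_time, wallet, action, amount_eth)`. That schema is an assumption. On Base you would point a similar query at whatever decoded-events table your query tool exposes. Because "total ETH staked per day" can mean two things, the query reports both the day's net deposits and the cumulative total.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE staking_events (block_time TEXT, wallet TEXT, action TEXT, amount_eth REAL)"
)
conn.executemany(
    "INSERT INTO staking_events VALUES (?, ?, ?, ?)",
    [
        ("2024-06-01 08:00:00", "0xa1", "deposit", 2.0),
        ("2024-06-01 09:30:00", "0xb2", "deposit", 1.0),
        ("2024-06-01 17:45:00", "0xa1", "deposit", 0.5),
        ("2024-06-02 11:00:00", "0xb2", "withdraw", 0.25),
    ],
)

daily_query = """
WITH daily AS (
    SELECT
        DATE(block_time)       AS day,
        COUNT(DISTINCT wallet) AS unique_wallets,
        -- Assumes only 'deposit' and 'withdraw' actions exist.
        SUM(CASE WHEN action = 'deposit' THEN amount_eth ELSE -amount_eth END) AS net_eth_staked
    FROM staking_events
    GROUP BY DATE(block_time)
)
SELECT day, unique_wallets, net_eth_staked,
       -- Cumulative total staked as of the end of each day.
       SUM(net_eth_staked) OVER (ORDER BY day) AS total_eth_staked
FROM daily
ORDER BY day
"""
for row in conn.execute(daily_query):
    print(row)
```

Note that wallet `0xa1` deposits twice on the first day but is counted once, which is exactly what `COUNT(DISTINCT wallet)` is for.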

Project 2: Smart Contract Observability

Every data and analytics engineer worth their salt needs to be a pro at decoding smart contract interactions. This project is your chance to prove you can translate those cryptic blockchain events into something a human can actually understand and use.

The idea here is to monitor the health and activity of a specific smart contract, turning raw event logs into a clean, readable feed.

A great portfolio project doesn't just show what you can do; it shows how you think. It demonstrates your ability to frame a business problem and then use data to build a compelling solution from scratch.

- The Goal: Pick a well-known smart contract (like a popular NFT collection) and monitor it to track function calls and events. Then, visualize how often specific actions, like mints or transfers, happen over time.

- App Generation: "Generate a simple web app that serves as a real-time event monitor for the CryptoPunks smart contract on Ethereum."

- SQL Generation: "Generate a SQL query to count the daily number of 'Transfer' events from the CryptoPunks smart contract. Segment the results by day for the last 30 days."
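To check a generated query against something you can follow line by line, here is the same logic in plain Python over a list of raw logs. It relies on one well-known fact: `topics[0]` of a `Transfer(address,address,uint256)` event is the Keccak-256 hash of that signature. The sketch assumes the contract emits that standard signature. The log dictionaries, their `block_time` field, and the fixed "today" date are hypothetical, and a real feed would also filter by contract address and join block timestamps from block data.

```python
from collections import Counter
from datetime import date, datetime, timedelta

# keccak256("Transfer(address,address,uint256)"), the standard Transfer event topic.
TRANSFER_TOPIC = "0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef"


def daily_transfer_counts(logs: list[dict], today: date, days: int = 30) -> dict[str, int]:
    """Count Transfer logs per calendar day over the trailing `days` days, including today."""
    start = today - timedelta(days=days - 1)
    counts = Counter()
    for log in logs:
        # Keep only Transfer events; other events from the same contract are skipped.
        if log["topics"][0].lower() != TRANSFER_TOPIC:
            continue
        day = datetime.fromisoformat(log["block_time"]).date()
        if start <= day <= today:
            counts[day.isoformat()] += 1
    # Emit every day in the window, including days with zero transfers.
    window = [(start + timedelta(days=i)).isoformat() for i in range(days)]
    return {day: counts.get(day, 0) for day in window}


logs = [
    {"topics": [TRANSFER_TOPIC], "block_time": "2024-07-30T12:00:00"},
    {"topics": [TRANSFER_TOPIC], "block_time": "2024-07-30T18:00:00"},
    {"topics": ["0x" + "11" * 32], "block_time": "2024-07-30T19:00:00"},  # some other event
    {"topics": [TRANSFER_TOPIC], "block_time": "2024-05-01T00:00:00"},    # outside the window
]
result = daily_transfer_counts(logs, today=date(2024, 7, 31))
print(result["2024-07-30"], len(result))
```

Filling in zero-count days matters for the visualization step, since a chart built only from days with events would silently hide quiet periods.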

Project 3: Deep Dive into DeFi Protocol Analysis

DeFi runs on data. Plain and simple. This project demonstrates that you can dive into a complex protocol, pull out the core financial metrics, and prove you can handle the high-stakes world of onchain finance.

- The Goal: Analyze a major DeFi protocol like Uniswap to track key performance indicators (KPIs). You’ll want to look at things like Total Value Locked (TVL) and daily swap volume for a specific liquidity pool.

- App Generation: "Generate a dashboard app that visualizes key metrics for the USDC-ETH liquidity pool on Uniswap V3."

- SQL Generation: "Write a SQL query to calculate the daily trading volume and the total value locked (TVL) for the Uniswap V3 USDC-ETH pool on Ethereum."
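As a starting point for checking the volume half of that query, the sketch below works over a hypothetical table of already-decoded Uniswap V3 `Swap` events. Here `amount0` and `amount1` are the signed changes in the pool's token balances, where a positive value means tokens flowed into the pool. It also assumes `token0` is USDC with 6 decimals, so daily volume in USD is approximated as the sum of absolute USDC amounts. TVL is left out on purpose, because it needs the pool's token balances and an ETH price, which this toy table does not contain.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical decoded Swap events; amounts are raw integers (USDC: 6 decimals, WETH: 18).
conn.execute(
    "CREATE TABLE uniswap_v3_swaps (block_time TEXT, amount0 INTEGER, amount1 INTEGER)"
)
conn.executemany(
    "INSERT INTO uniswap_v3_swaps VALUES (?, ?, ?)",
    [
        # Trader sells 3,000 USDC for 1 WETH: pool gains USDC, loses WETH.
        ("2024-08-01 10:00:00", 3_000_000_000, -1_000_000_000_000_000_000),
        # Trader sells 0.5 WETH for 1,500 USDC: pool loses USDC, gains WETH.
        ("2024-08-01 14:00:00", -1_500_000_000, 500_000_000_000_000_000),
        ("2024-08-02 09:00:00", 600_000_000, -200_000_000_000_000_000),
    ],
)

volume_query = """
SELECT
    DATE(block_time)        AS day,
    COUNT(*)                AS swaps,
    SUM(ABS(amount0)) / 1e6 AS volume_usdc  -- token0 assumed to be USDC (6 decimals)
FROM uniswap_v3_swaps
GROUP BY DATE(block_time)
ORDER BY day
"""
for row in conn.execute(volume_query):
    print(row)
```

Taking the absolute value is the key step. Because swaps in opposite directions have opposite signs, a plain `SUM(amount0)` would net them out and understate trading volume.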

Putting in the work on projects like these doesn't just sharpen your skills; it seriously boosts your career and earning potential. Experience levels are directly tied to massive pay jumps for data professionals. This makes the data and analytics engineer career a ladder ripe for climbing, especially when you can build quickly with an AI app generator like Dreamspace. The data is clear: US data engineers starting at $105,000 can scale to $177,000 with over 15 years of experience, and some top-tier roles command an average of $233,999. You can explore more salary details and see how experience changes the game on Simplilearn's data engineer salary guide.

Preparing to Ace Your Technical Interview

The interview process for a data and analytics engineer can feel like the final boss battle, but if you know what to expect, you can walk in with confidence. Typically, the journey is broken down into a few stages, each designed to test a different part of your toolkit—from pure technical chops to your strategic, big-picture thinking.

It usually kicks off with a technical screen. This is where they'll want to see your SQL and Python skills in action, often through live coding challenges or a take-home assignment based on a real-world onchain scenario. For example, you might get an SQL problem like: "Write a query to find the top 10 most active wallets on a specific DeFi protocol in the last 30 days."

Deconstructing the SQL Challenge

The secret to cracking these problems isn't just about spitting out a perfect query. It's about showing them how you think. The best approach is to talk through your logic, breaking the problem down into smaller, more manageable pieces.

- Identify the Source: First, you’d want to confirm which tables hold the transaction or event log data for the protocol.

- Define "Active": Next, it's smart to ask for clarification. What does "active" actually mean here? Is it the number of transactions, total volume, or how often a wallet interacts?

- Construct the Query: With those details ironed out, you can start building. Filter for the right time frame, group the transactions by wallet address, count the interactions, and then order the results to pull out the top 10.
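Put together, those three steps might produce a query like the one below, here defining "active" as the number of distinct transactions (one of the options from the clarifying question). The `protocol_txs` table, its columns, and the fixed reference timestamp are hypothetical. SQLite runs through Python only so the example is self-contained, and in an interview you would adapt the date arithmetic to the warehouse's dialect.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE protocol_txs (tx_hash TEXT, wallet TEXT, block_time TEXT)")
conn.executemany(
    "INSERT INTO protocol_txs VALUES (?, ?, ?)",
    [
        ("0x01", "0xalice", "2024-09-25 10:00:00"),
        ("0x02", "0xalice", "2024-09-26 11:00:00"),
        ("0x03", "0xalice", "2024-09-27 12:00:00"),
        ("0x04", "0xbob",   "2024-09-20 09:00:00"),
        ("0x05", "0xbob",   "2024-09-28 09:00:00"),
        ("0x06", "0xcarol", "2024-09-29 15:00:00"),
        ("0x07", "0xdave",  "2024-07-01 08:00:00"),  # older than 30 days: filtered out
    ],
)

top_wallets_query = """
SELECT wallet, COUNT(DISTINCT tx_hash) AS tx_count
FROM protocol_txs
WHERE block_time >= DATETIME(:as_of, '-30 days')  -- step 3: time window
  AND block_time <  :as_of
GROUP BY wallet                                    -- one row per wallet
ORDER BY tx_count DESC, wallet                     -- tie-break for stable output
LIMIT :top_n
"""
params = {"as_of": "2024-10-01 00:00:00", "top_n": 10}
for row in conn.execute(top_wallets_query, params):
    print(row)
```

The explicit tie-breaker on `wallet` is a small detail worth mentioning out loud. Without it, wallets with equal counts can come back in any order, and "top 10" becomes non-deterministic.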

This step-by-step method shows you’re a thoughtful problem-solver, not just someone who can write code. If you’re curious about how modern tools can speed up this process, you might find our guide on how to use an AI-powered code editor helpful.

The candidates who really stand out are the ones who communicate their thought process. They treat the interview less like a test and more like a collaboration, explaining their assumptions and walking the interviewer through their logic.

Showcasing Your Portfolio and Value

After the technical screens, you can expect a system design round focused on crypto use cases and a final interview where you'll present your portfolio. This is your chance to really tell a story. When you talk about your projects, especially any you've built with an AI app generator like Dreamspace, don't just show the code—explain the value. Talk about why you built that DeFi dashboard and what crucial insights it provided. This turns your work from a simple coding exercise into a powerful business solution.

And remember, these skills are in high demand. The average salary for a Data Analytics Engineer in the US is a respectable $76,460 per year. But that figure can change dramatically depending on where you are. In a tech hub like San Jose, CA, the average soars to $150,961—that’s 97% higher than the national baseline. You can discover more insights about these salary trends to see just how much location and specialized talent can boost your earning potential.

Got Questions? We've Got Answers

It's easy to get lost in the sea of data-related job titles. Terms like "data and analytics engineer" are still relatively new, so let's clear up a few of the most common questions that come up.

How Is a Data and Analytics Engineer Different From a Data Scientist?

Let's use an analogy: building a world-class kitchen. The data and analytics engineer is the master plumber and electrician. They lay all the foundational pipework (the data pipelines), install a top-of-the-line filtration system (data cleaning and modeling), and make sure every ingredient is perfectly organized and instantly accessible.

The data scientist is the Michelin-star chef who comes into that pristine kitchen. They use the perfectly prepped ingredients to create incredible new dishes—predictive models, complex statistical analyses, and forecasts that reveal what customers will want next. One builds the reliable, high-performance infrastructure; the other uses it to produce groundbreaking insights.

What Programming Languages Really Matter for This Role?

When you're working as a Web3 data and analytics engineer, your toolkit really boils down to two non-negotiables:

- SQL: This is your bread and butter. You'll be using it all day, every day to wrangle, transform, and make sense of raw onchain data.

- Python: This is your Swiss Army knife. It's perfect for everything from scripting data quality checks to pulling data directly from a blockchain node with a library like Web3.py.

While other languages have their place, deep mastery of advanced SQL and Python will cover the vast majority of the problems you encounter. This is the combination that turns messy blockchain data into real business value.

I'm a Software Developer. How Do I Make the Jump to This Field?

Software developers have a huge advantage because they already think like engineers. The key to making the switch is to build up your data-specific muscle memory.

The best way to do this is to get your hands dirty. Try building a project where you're forced to write complex SQL to analyze onchain activity. A great shortcut is to use an AI app generator like Dreamspace to spin up a dApp in minutes, and then use its tools to generate the queries needed to analyze user behavior. Nothing bridges the gap faster than real-world practice, and it’s the best way to show you can think like a data and analytics engineer.

Ready to build your own onchain apps and the analytics to power them? With Dreamspace, our vibe coding studio, you can generate production-ready dApps and the exact SQL you need with AI. Start creating today at dreamspace.xyz.