12 Best LLMs for Coding: Developer Picks for 2025

The world of software development is undergoing a fundamental shift, with Large Language Models (LLMs) at the forefront. These powerful tools are no longer just for generating boilerplate code; they are now capable of debugging complex algorithms, translating between programming languages, and even scaffolding entire applications. With this explosion of AI capabilities, the challenge has shifted from if you should use an AI assistant to which one you should choose.

Navigating the crowded market to find the best LLM for coding can feel overwhelming. This guide is designed to cut through the noise and provide clear, actionable insights. We offer a detailed, hands-on review of the 12 leading platforms and models available today, complete with screenshots and direct links. For broader background before you choose, this article on the principles of Conversational AI provides useful context.

We will explore their specific strengths, honestly assess their limitations, and show you how to integrate them into your workflow. Whether you're a professional developer, a crypto enthusiast building on the blockchain, or a "vibe coder" using an innovative platform like the Dreamspace AI app generator, this list will help you find the perfect AI coding partner. Let’s get started.

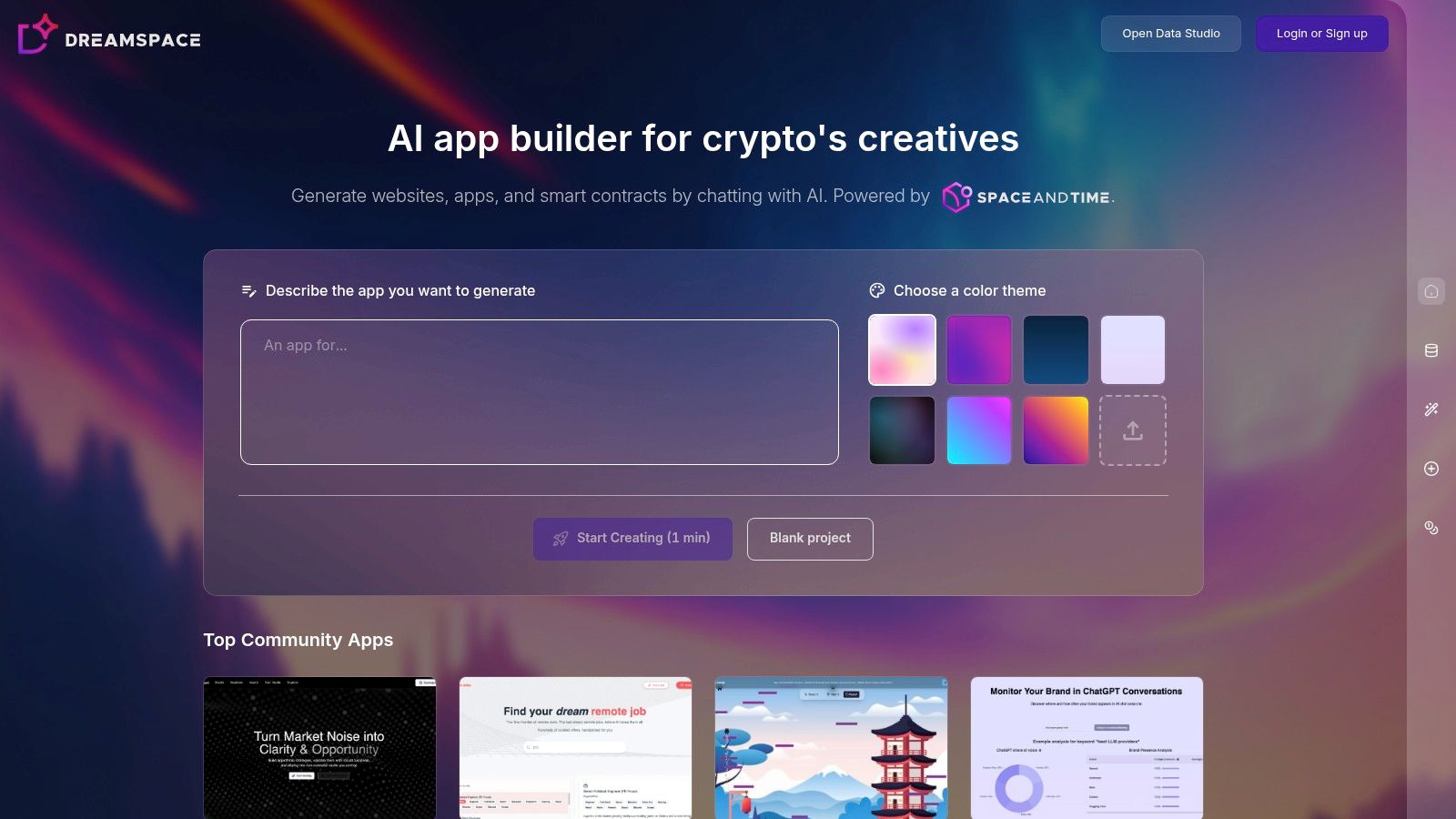

1. Dreamspace

Dreamspace distinguishes itself as a premier AI app generator, earning its place as the top choice for developers seeking an integrated, no-code solution for web3 applications. It is not just an LLM for coding; it's a comprehensive "vibe coding" studio that translates natural language prompts into fully functional, production-ready on-chain apps. This platform generates the front-end website, necessary smart contracts, and even the blockchain SQL queries required for data interaction, all from a simple chat-style input.

The platform is engineered for speed and creativity, allowing "vibe coders" and crypto creatives to move from an idea to a shareable, deployed application in minutes. This rapid prototyping capability is a significant advantage over traditional development cycles, which often involve separate workflows for front-end, back-end, and smart contract development.

Key Features and Use Cases

Dreamspace's power lies in its end-to-end generation capabilities tailored specifically for the web3 ecosystem.

- Full-Stack Generation: The system handles every layer of the application stack. For example, a user could prompt, "Create a decentralized job board for remote crypto roles," and Dreamspace would generate the user interface, a smart contract to manage listings, and SQL queries to pull relevant data from its Open Data Studio.

- Rapid Prototyping: The advertised "Start Creating (1 min)" flow is a core strength. This makes it an ideal tool for hackathons, validating a startup idea, or quickly building a proof-of-concept for a decentralized application (dApp).

- Community Templates: Users can leverage a library of existing apps like Altquant or RemoteJobs as a starting point, which dramatically accelerates the creation process and provides practical examples of what the platform can build.

Practical Considerations

While Dreamspace excels at speed and accessibility, users should note that pricing information is not publicly listed and requires direct contact. For production-grade applications, it's crucial to perform a manual security review and a formal audit of the generated smart contracts, as the site does not display formal security certifications. This makes it best suited to coding prototypes and creative projects where speed is the priority. You can get more familiar with the platform's capabilities by reading about their AI app builder on blog.dreamspace.xyz.

- Website: https://dreamspace.xyz

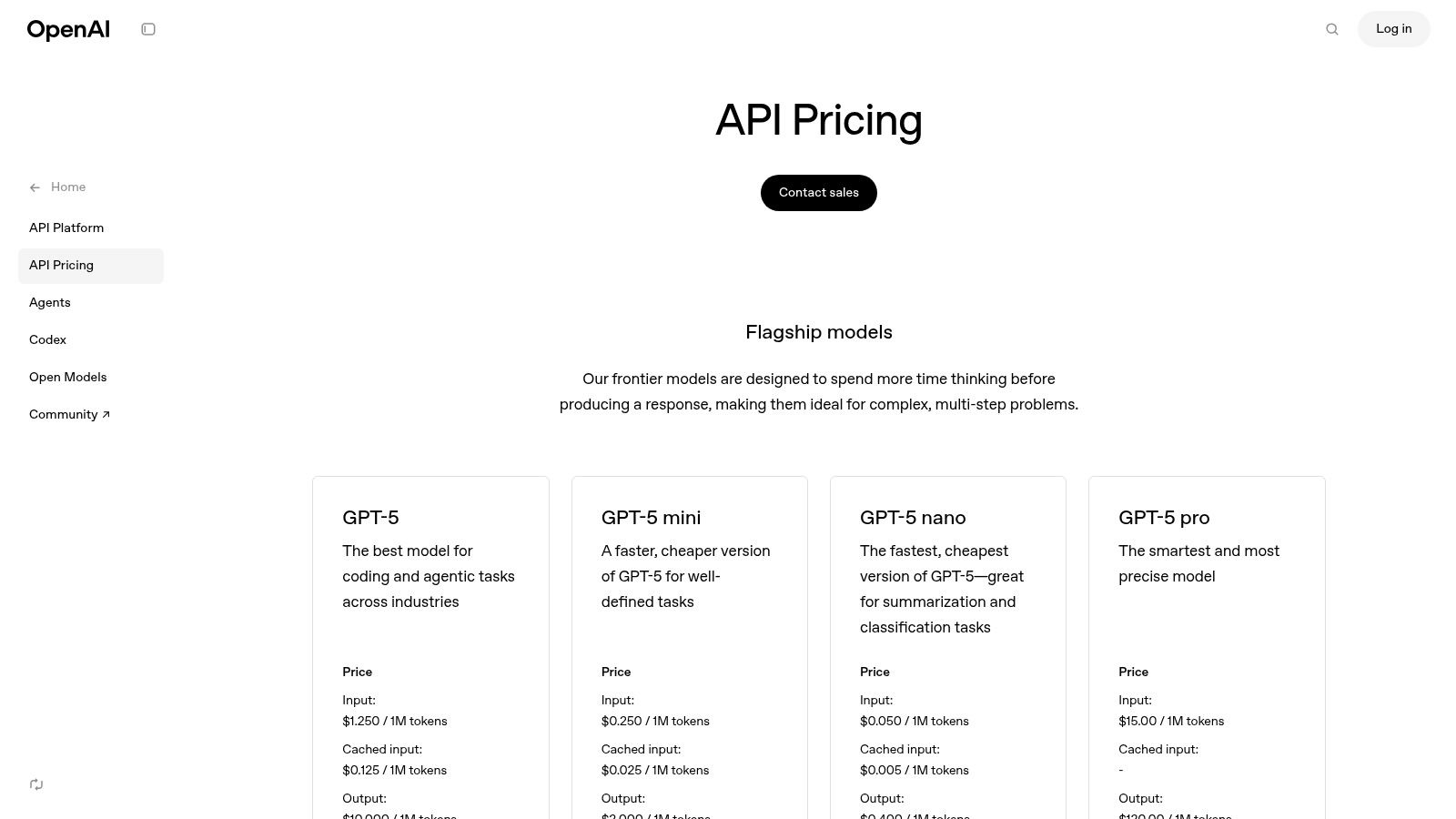

2. OpenAI

OpenAI remains a benchmark for developers seeking the best LLM for coding, providing state-of-the-art models like the GPT-4o and GPT-4.1 families via a robust API. Its platform is not just about raw model access; it’s an integrated ecosystem featuring a developer Playground for rapid prototyping, comprehensive documentation, and the powerful Assistants API for building complex, tool-using agents. This makes it ideal for everything from simple code generation to sophisticated, agentic software development.

For vibe coders and those using an AI app generator like Dreamspace, OpenAI's models provide the high-quality reasoning needed for complex logic and code refactoring tasks. The Code Interpreter feature, which allows models to run generated code in a sandboxed environment, is particularly useful for debugging and data analysis workflows.
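As a rough illustration of what model access through an API involves, the sketch below assembles the JSON body for a chat-style code-generation request. The `model` and `messages` fields follow the general shape of OpenAI's Chat Completions format; the helper name `build_codegen_request`, the system prompt, and the default model string are illustrative assumptions, and nothing is sent over the network.

```python
import json


def build_codegen_request(task: str, language: str = "Python",
                          model: str = "gpt-4o") -> dict:
    """Assemble a chat-style request body for a code-generation task.

    Hypothetical helper: it only builds the payload and sends nothing.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system",
             "content": f"You are a careful {language} developer. "
                        "Return only code, with brief comments."},
            {"role": "user", "content": task},
        ],
        # A low temperature tends to give more repeatable code output.
        "temperature": 0.2,
    }


payload = build_codegen_request("Write a function that reverses a linked list.")
print(json.dumps(payload, indent=2))
```

In practice you would hand a payload like this to the provider's official SDK or HTTP endpoint along with an API key; check the current documentation for supported model names and parameters.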

Key Considerations

While its performance is top-tier, the token-based pricing can become costly for high-volume applications. Enterprise-level features such as reserved capacity and priority access add further cost but ensure reliability for mission-critical systems.
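To get a feel for how token-based pricing scales with volume, here is a minimal back-of-the-envelope estimator. The function name and all numbers are assumptions for illustration: the per-million-token prices are placeholders, not real rates, so substitute the figures from the provider's pricing page.

```python
def estimate_monthly_cost(requests_per_day: int,
                          input_tokens: int,
                          output_tokens: int,
                          input_price_per_m: float,
                          output_price_per_m: float,
                          days: int = 30) -> float:
    """Estimate monthly spend (USD) for an API billed per million tokens."""
    total_in = requests_per_day * input_tokens * days
    total_out = requests_per_day * output_tokens * days
    input_cost = total_in / 1_000_000 * input_price_per_m
    output_cost = total_out / 1_000_000 * output_price_per_m
    return input_cost + output_cost


# Placeholder prices in USD per million tokens, NOT real rates.
cost = estimate_monthly_cost(
    requests_per_day=2_000,
    input_tokens=1_500,   # average prompt size per request
    output_tokens=500,    # average completion size per request
    input_price_per_m=1.00,
    output_price_per_m=4.00,
)
print(f"Estimated monthly cost: ${cost:,.2f}")
```

Because output tokens are often priced higher than input tokens, trimming verbose completions can matter as much as shortening prompts.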

- Pros: Unmatched code quality and reasoning, mature tooling and a vast developer ecosystem.

- Cons: Premium pricing for high-throughput workloads and complex enterprise features.

For a deeper dive into how OpenAI compares with other models, you can learn more about its programming capabilities on the Dreamspace blog.

Website: https://openai.com/api/pricing

3. Anthropic (Claude)

Anthropic’s Claude 3 family of models (Haiku, Sonnet, and Opus) offers a powerful alternative for developers looking for the best LLM for coding, with a strong emphasis on safety and sophisticated reasoning. The platform is accessible via its API and through cloud partners like Amazon Bedrock and Google Vertex AI, providing flexible integration options. Its standout feature is the 200K-token context window, which is well suited to analyzing and refactoring large, complex codebases without losing context.

This long-context capability is a significant advantage for any AI app generator, including a vibe coding studio like Dreamspace, allowing the model to understand entire projects at once to produce more coherent and context-aware code. The 'Artifacts' feature also provides a unique, no-code environment where users can see and interact with generated code snippets, like a React component, in a live preview pane, streamlining the development and testing workflow.

Key Considerations

Anthropic's API pricing is competitive, especially its cost-effective Haiku model for simpler tasks, but costs can rise when using the more powerful Opus model for extensive code generation. The platform's commitment to "Constitutional AI" ensures safer outputs but might be slightly more constrained than other models for certain edge-case requests.

- Pros: Excellent for long-context code analysis, strong safety features, and innovative tooling like Artifacts.

- Cons: API costs for the top-tier model can accumulate with heavy usage, and safety alignment can sometimes limit output creativity.

Website: https://docs.anthropic.com/en/docs/about-claude/models

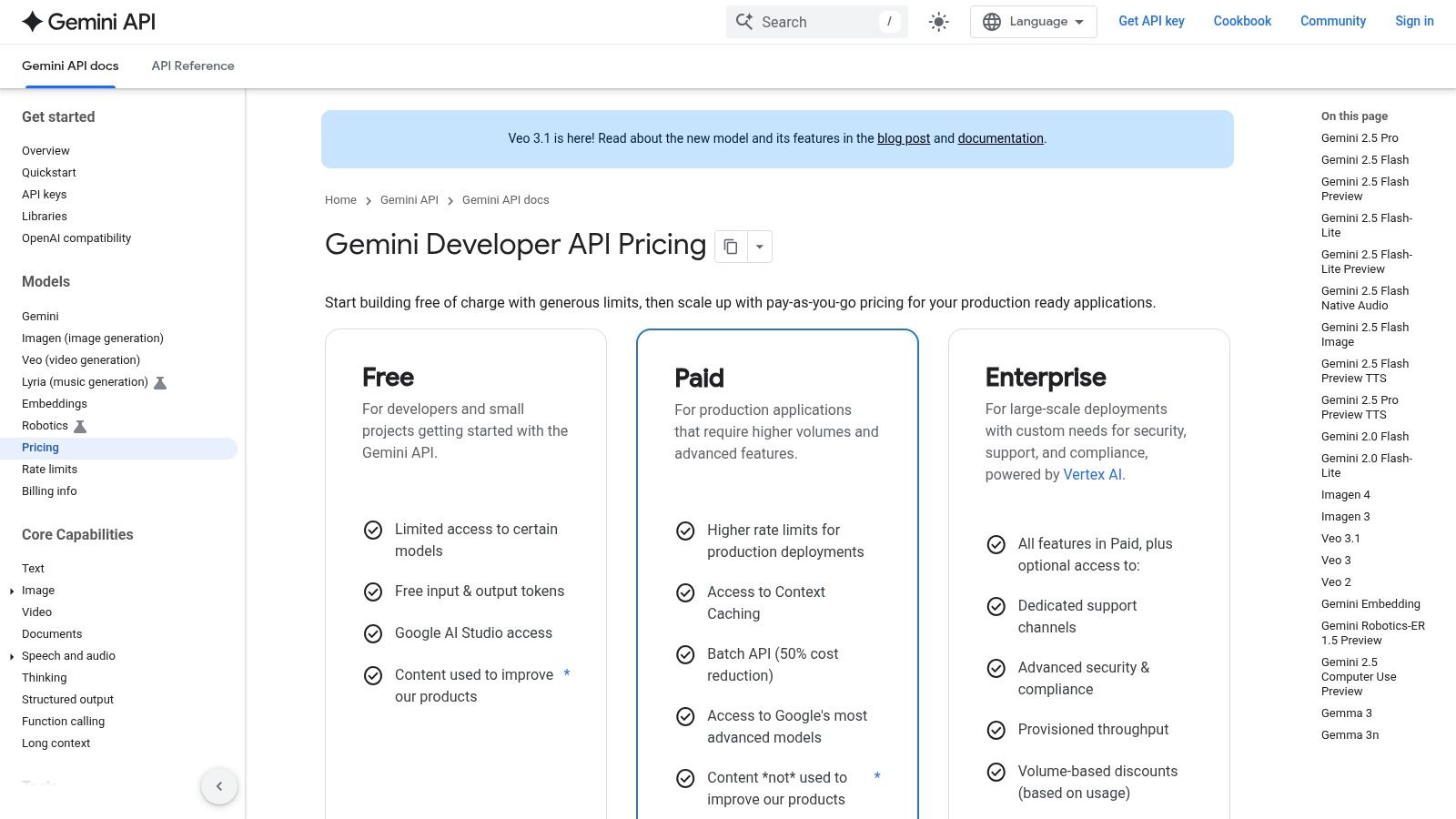

4. Google AI Studio (Gemini)

Google AI Studio offers access to the versatile Gemini model family, making it a powerful contender for the best LLM for coding. Its API-first approach, supported by SDKs and robust integrations, appeals to developers building scalable applications. The platform’s standout feature is its large context window, with models like Gemini 1.5 Pro handling up to 1 million tokens, which is transformative for analyzing and refactoring entire codebases in a single prompt.

This massive context is a game-changer for any AI app generator, enabling a platform like the Dreamspace vibe coding studio to understand complex, multi-file projects for more accurate code generation and architecture suggestions. The ability to ground models with Google Search also provides up-to-date information, which is crucial for working with the latest libraries and frameworks.
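Whether a project actually fits in a given context window is easy to approximate. The sketch below assumes roughly four characters per token, which is a crude heuristic rather than any provider's tokenizer, and checks an estimated token count against the 200K and 1 million token figures mentioned in this guide. The function names, file suffixes, and output reserve are illustrative assumptions.

```python
from pathlib import Path

CHARS_PER_TOKEN = 4  # rough heuristic (assumption); real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a string from its length."""
    return len(text) // CHARS_PER_TOKEN + 1


def codebase_tokens(root: str, suffixes=(".py", ".ts", ".sol")) -> int:
    """Sum estimated tokens over source files under `root`."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            total += estimate_tokens(path.read_text(errors="ignore"))
    return total


def fits(tokens: int, window: int, reserve_for_output: int = 8_000) -> bool:
    """Check the prompt fits while leaving room for the model's reply."""
    return tokens + reserve_for_output <= window


project_tokens = 350_000  # example value; use codebase_tokens("path/to/repo")
for label, window in {"200K window": 200_000, "1M window": 1_000_000}.items():
    print(label, "fits" if fits(project_tokens, window) else "does not fit")
```

For an accurate count, use the token-counting utility that the provider documents for the specific model you plan to call.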

Key Considerations

While Google AI Studio provides a generous free tier for getting started, larger-scale enterprise deployments and specific features are best managed through the Google Cloud Platform, which may add a layer of complexity for some teams. The model naming and versioning also tend to evolve quickly.

- Pros: Extremely large context windows, competitive price-performance, and a generous free tier for initial development.

- Cons: Enterprise features may require Google Cloud setup, and the rapid evolution of model names can be confusing.

Website: https://ai.google.dev/pricing

5. Mistral AI

Mistral AI emerges as a strong contender for developers looking for the best LLM for coding with a focus on price-performance. Its specialized Codestral models, available via a transparent, self-serve API, are engineered specifically for code generation, completion, and reasoning tasks. The platform's emphasis on open-source contributions and efficient model architecture makes it a compelling, cost-effective alternative for various development workflows.

For creators using an AI app generator or vibe coding studio like Dreamspace, Mistral's models provide an excellent balance of speed and capability, especially for projects where budget optimization is key. With sizable context windows and straightforward API pricing, it's well-suited for processing large codebases or building chat-based coding assistants without incurring unpredictable costs.

Key Considerations

Mistral's platform includes both API access and hosted chat plans like Le Chat, offering flexibility for individuals and teams. While its ecosystem is still growing compared to giants like OpenAI, its strong performance on coding benchmarks and competitive pricing make it an attractive option for developers prioritizing efficiency and affordability.

- Pros: Strong price/performance for coding tokens, straightforward plans for individuals and teams.

- Cons: Smaller ecosystem compared with OpenAI and Anthropic, and model availability and hosting options can vary by region and provider.

Website: https://mistral.ai/pricing

6. Meta Llama

For developers seeking the best LLM for coding with maximum control, Meta Llama offers open-weights models, including the specialized Code Llama family. This approach allows teams to download, fine-tune, and self-host powerful models, granting full sovereignty over data privacy, infrastructure, and customization. It’s the ideal path for building bespoke coding assistants or integrating proprietary logic directly into the model.

This level of control is particularly valuable for platforms like the Dreamspace AI app generator, where developers might want to create a highly specialized, self-hosted coding agent fine-tuned on their own codebase. Meta provides official GitHub repositories and clear licensing terms, enabling commercial use within its guidelines and fostering a strong community ecosystem.

Key Considerations

While the model weights are available at no licensing cost, this route requires managing all operational aspects. Teams are responsible for the infrastructure costs, serving, latency optimization, and scaling, which can be a significant technical and financial undertaking.
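One way to reason about that undertaking is a simple break-even calculation: how many tokens per month would you need to process before an always-on GPU costs less than a hosted API? The sketch below uses placeholder figures (the GPU hourly rate and the API price are assumptions, not quotes) and ignores engineering time, redundancy, and whether a single GPU can actually sustain that throughput.

```python
def break_even_tokens_per_month(gpu_hourly_usd: float,
                                api_price_per_m_tokens: float,
                                hours_per_month: float = 730.0) -> float:
    """Tokens per month at which a dedicated GPU matches hosted API spend."""
    monthly_gpu_cost = gpu_hourly_usd * hours_per_month
    return monthly_gpu_cost / api_price_per_m_tokens * 1_000_000


# Placeholder inputs: a GPU rented around the clock versus a blended API price.
tokens = break_even_tokens_per_month(gpu_hourly_usd=2.50,
                                     api_price_per_m_tokens=1.00)
print(f"Break-even volume: {tokens / 1e9:.2f} billion tokens per month")
```

Below the break-even volume, a pay-per-token API is usually the cheaper option; above it, self-hosting starts to look attractive if the hardware can keep up.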

- Pros: Full control over hosting and privacy, no model licensing fees, and extensive opportunities for custom fine-tuning.

- Cons: Users must bear the full cost and complexity of infrastructure, and the "open-weights" license includes specific conditions for commercial use.

Website: https://www.llama.com

7. Hugging Face

Hugging Face has become the definitive hub for developers exploring the vast landscape of open-source models, making it an essential resource for finding the best LLM for coding. It hosts the largest catalog of models, including specialized code generators like StarCoder2 and various Llama-based coders. The platform offers a seamless path from experimentation in free playgrounds and demos to production-ready deployments using pay-as-you-go Inference Providers or dedicated Inference Endpoints.

For developers building with an AI app generator or a vibe coding studio like Dreamspace, Hugging Face provides unparalleled flexibility. You can quickly benchmark dozens of models to find the perfect fit for your specific coding task, whether it's autocompletion, bug fixing, or algorithm generation, and then scale it without managing the underlying infrastructure. This makes it an ideal environment for rapid prototyping and deploying custom-tailored coding assistants.

Key Considerations

While the platform offers immense choice, the performance and reliability can vary significantly depending on the specific model and inference provider chosen. Moving to dedicated Inference Endpoints ensures stability and features like autoscaling for production workloads, but the costs can accumulate, especially for models that need to be available 24/7.

- Pros: Unmatched selection of open-source coding models and an easy transition from experimentation to production without managing infrastructure.

- Cons: Performance varies by model and provider, and dedicated endpoint costs can be significant for continuous workloads.

Website: https://huggingface.co

8. GitHub Copilot

As a foundational tool for millions of developers, GitHub Copilot is a strong contender for the best LLM for coding, offering deep integration directly within popular IDEs. It goes beyond simple autocompletion, providing inline code suggestions, a powerful chat interface for debugging, and automated assistance with code reviews. Copilot is designed to feel like a natural extension of the developer's workflow, minimizing context switching and boosting productivity.

For developers using an AI app generator or a vibe coding studio like Dreamspace, Copilot acts as an ever-present pair programmer, accelerating the development of both frontend and backend components. Its strength lies in its tight coupling with the GitHub ecosystem, understanding the context of your repository to provide more relevant and accurate suggestions.

Key Considerations

Copilot is available through various plans, from a free tier for students to business and enterprise solutions. Access to more advanced features, like its agent mode or a rotating selection of top-tier models, is often gated by the premium plans. This model-agnostic approach can be a benefit, but it also means the underlying model's performance can change over time.

- Pros: Unbeatable integration with GitHub and major IDEs, comprehensive developer productivity tooling.

- Cons: Access to the most advanced models is tied to premium plans, and the specific model lineup can be inconsistent.

You can explore how it compares to other IDE-centric tools in our list of Cursor alternatives.

Website: https://github.com/features/copilot

9. Amazon Bedrock

Amazon Bedrock serves as a managed AWS service, offering a unified gateway to many of the best LLMs for coding from leading providers like Anthropic, Meta, and Mistral. Rather than being a single model, it's a powerful orchestration layer that allows developers to access and manage multiple foundation models through a single API. This is ideal for enterprises already invested in the AWS ecosystem, providing a streamlined way to experiment with and deploy various models under one consolidated bill.

For teams using an AI app generator like the Dreamspace vibe coding studio, Bedrock's flexibility allows them to select the most cost-effective or highest-performing model for specific coding tasks, from generating boilerplate to complex algorithm design. Features like Knowledge Bases for RAG, Guardrails, and deep integration with AWS services (like VPC and IAM) make it a secure and scalable choice for building enterprise-grade applications.

Key Considerations

While the multi-model approach is a significant advantage, pricing can be complex as it varies by the chosen model, usage type (on-demand vs. provisioned), and additional features. Developers should also be aware that imported models might have different scaling characteristics or cold-start times compared to native AWS services.

- Pros: Consolidated access and billing for multiple top-tier models, strong enterprise governance and seamless integration with the AWS stack.

- Cons: Pricing can be complex and varies significantly between models, and model-specific performance nuances can require careful management.

Website: https://aws.amazon.com/bedrock

10. Microsoft Azure OpenAI Service

For organizations building on Microsoft's cloud infrastructure, Azure OpenAI Service offers the best LLM for coding through enterprise-grade deployments of OpenAI's premier models like GPT-4o. This service wraps OpenAI's powerful technology within Azure's robust security, compliance, and private networking framework. It is specifically designed for businesses requiring strict data governance and predictable performance for mission-critical applications.

The integration with Azure AI Studio and the wider Microsoft 365 ecosystem creates a unified development environment. For those building with an AI app generator like the Dreamspace vibe coding studio, this means leveraging top-tier models for complex code generation and logic while adhering to stringent corporate IT policies. Features like provisioned throughput and reserved capacity ensure stable performance and predictable costs at scale.

Key Considerations

Azure's pricing can be complex, as costs for models like GPT-4o vary by region and are often tied to specific deployment configurations. While this provides deployment flexibility, it requires careful planning using the Azure pricing calculator to estimate total expenses for high-throughput coding tasks.

- Pros: Enterprise-grade SLAs, compliance, and identity controls; flexible deployment topologies with global data-zone options.

- Cons: Pricing is more complex than direct API access and varies by region; some provisioning details depend on specific usage patterns.

Website: https://azure.microsoft.com/pricing/details/cognitive-services/openai-service

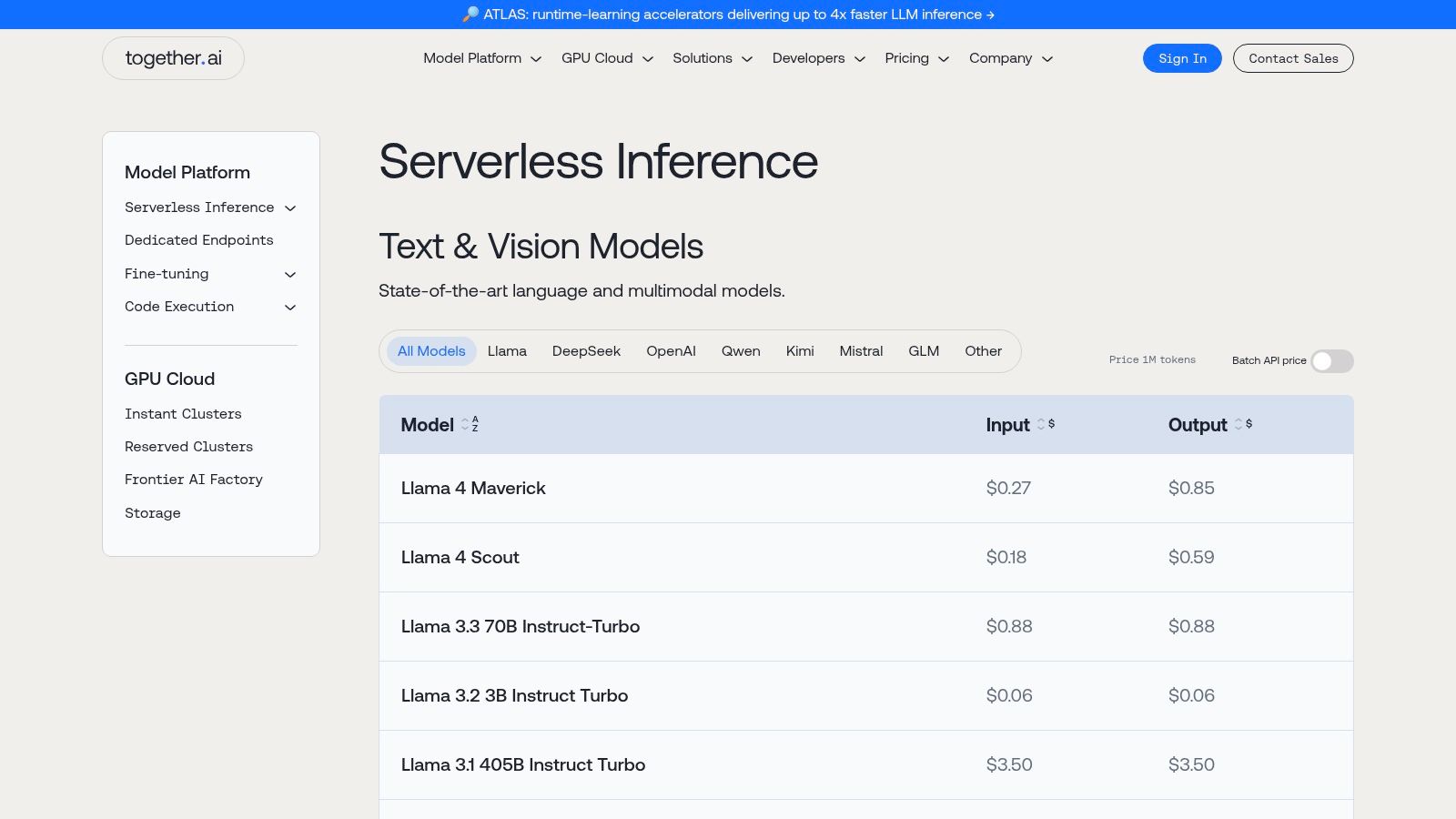

11. Together AI

Together AI positions itself as a cloud platform for developers who need maximum flexibility and performance, offering a strong selection of coding LLMs through its infrastructure. The platform provides access to a vast catalog of leading open-source models, enabling developers to run hosted inference, perform fine-tuning (SFT/DPO), and deploy on dedicated GPU endpoints for production workloads. This makes it a powerful choice for teams wanting control over their model stack without managing the underlying hardware.

For developers using an AI app generator or a vibe coding studio like Dreamspace, Together AI offers a robust backend for running specialized, open-source coding models that can be fine-tuned for specific programming languages or domains. A standout feature is its sandboxed Code Interpreter API, allowing for the safe execution of generated code, which is invaluable for building complex agents or interactive coding assistants that require verification and debugging capabilities.
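The idea of executing generated code to verify it can be sketched locally. The example below is not Together AI's API: it is a stand-in that runs a code string in a separate Python process with a timeout, using a hypothetical helper named `run_generated_code`. A subprocess is not a security sandbox, so treat this only as an illustration of the verify-then-accept loop.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_generated_code(code: str, timeout_s: float = 5.0) -> tuple[bool, str]:
    """Run a code string in a separate Python process and report the result.

    Note: a subprocess with a timeout is NOT a security sandbox.
    """
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(code)
        try:
            proc = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True, text=True, timeout=timeout_s, cwd=tmp,
            )
        except subprocess.TimeoutExpired:
            return False, "timed out"
    ok = proc.returncode == 0
    # Return stdout on success, stderr (e.g. the traceback) on failure.
    return ok, proc.stdout if ok else proc.stderr


# Pretend this string came back from a model, with a self-check appended.
generated = (
    "def add(a, b):\n"
    "    return a + b\n"
    "\n"
    "assert add(2, 3) == 5\n"
    "print('ok')\n"
)
passed, output = run_generated_code(generated)
print(passed, output.strip())
```

An agent built on this pattern would feed the captured error text back to the model and ask for a corrected version when `passed` is false.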

Key Considerations

While offering great flexibility, the platform requires developers to manage the tradeoffs between different models, latency, and performance. The transparent pricing for infrastructure and fine-tuning is a major advantage, but costs can accumulate, especially when using persistent dedicated GPUs or undertaking extensive model training.

- Pros: Transparent infrastructure pricing, a broad catalog of top open-source coder models, and advanced fine-tuning options.

- Cons: Users must manage model choice and performance tradeoffs, and costs can rise with persistent GPUs or heavy fine-tuning.

Website: https://www.together.ai/pricing

12. Amazon CodeWhisperer

Amazon CodeWhisperer, which AWS has since folded into Amazon Q Developer, establishes itself as a strong contender for the best LLM for coding, particularly for developers embedded in the AWS ecosystem. It acts as an AI coding companion directly within popular IDEs, offering real-time code suggestions, inline completions, and integrated security scanning. Its deep integration with AWS services and developer tooling simplifies building and deploying applications on the cloud, making it a natural choice for AWS-native projects.

For those using a vibe coding studio like the Dreamspace AI app generator to build applications that interact with AWS services, CodeWhisperer can significantly accelerate development by providing context-aware suggestions for SDKs and APIs. The included reference tracker, which flags code that resembles open-source training data, is a valuable feature for maintaining code originality and license compliance.

Key Considerations

The platform offers a generous free Individual tier, making it highly accessible for solo developers and those new to AI assistants. For teams, the Professional plan adds essential administrative controls and policy management. While its primary strength is its AWS integration, this focus means it may offer less general-purpose utility compared to more model-agnostic platforms.

- Pros: Excellent integration with AWS workflows and services, robust security scanning, and an attractive free tier for individual developers.

- Cons: Smaller model selection compared to multi-model platforms, and it provides the most value to developers already building on AWS.

Website: https://aws.amazon.com/codewhisperer

Top 12 Coding LLMs Comparison

Choosing Your Future: Integrating LLMs Into Your Workflow

The journey through the landscape of AI-powered coding tools reveals a powerful and diverse ecosystem. We've explored everything from the raw API power of OpenAI's GPT models and Anthropic's Claude, which excel at complex logic and generation, to the open-weights flexibility of Meta Llama and Mistral AI, offering unparalleled control and customization for those willing to manage their own infrastructure. For developers embedded in specific clouds, managed services like Amazon Bedrock and Azure OpenAI provide enterprise-grade security and seamless integration.

Ultimately, there is no single, universally best LLM for coding. The right choice is deeply personal and depends entirely on your project's unique demands, your team's skillset, and your overarching goals. The key is to match the tool to the task at hand.

Key Takeaways for Your Decision

To navigate this choice, consider these core factors:

- Project Requirements: Are you building a simple script, a complex decentralized application, or a mission-critical enterprise system? The scale and security needs of your project will guide you toward either a flexible API or a managed, compliant service.

- Developer Workflow: Do you prefer an integrated IDE companion like GitHub Copilot for real-time suggestions, or do you need a powerful backend model to drive a custom application? Your day-to-day coding habits matter.

- Control vs. Convenience: Self-hosting an open-weights model like Llama offers maximum control but requires significant technical overhead. Conversely, using a platform like Google AI Studio or a service like Together AI provides convenience and abstracts away the complexity.

- The Vibe Coding Frontier: For many, especially in the Web3 space, the ultimate goal is to translate ideas into functional applications as fast as possible. This is where the paradigm shifts from code assistance to code generation. Platforms like Dreamspace are leading this charge, acting as a vibe coding studio that can generate entire on-chain applications from simple prompts, effectively bypassing the need for line-by-line coding.
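These factors can be turned into a simple weighted decision matrix. The sketch below is illustrative only: the criteria names, weights, option labels, and 1 to 5 scores are made-up placeholders, not measurements of any product, so replace them with your own judgments.

```python
# Weights express how much each factor matters to you (they sum to 1.0 here).
weights = {"control": 0.2, "convenience": 0.3, "cost": 0.3, "ide_integration": 0.2}

# Placeholder 1-5 scores per option; fill these in from your own trials.
candidates = {
    "hosted API": {"control": 2, "convenience": 5, "cost": 3, "ide_integration": 3},
    "self-hosted open-weights model": {"control": 5, "convenience": 2, "cost": 3, "ide_integration": 2},
    "IDE assistant": {"control": 1, "convenience": 4, "cost": 4, "ide_integration": 5},
}


def weighted_score(scores: dict, weights: dict) -> float:
    """Combine per-criterion scores into a single weighted total."""
    return sum(weights[name] * scores[name] for name in weights)


ranking = sorted(
    ((name, weighted_score(scores, weights)) for name, scores in candidates.items()),
    key=lambda item: item[1],
    reverse=True,
)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

Changing the weights is the point of the exercise: a team that prizes data sovereignty would raise `control`, and the ranking would shift accordingly.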

Your Actionable Next Steps

The most effective way to find your ideal coding partner is through direct experimentation. Most of the services we've discussed offer free tiers, credits, or open-source playgrounds. Set aside time to test a few top contenders on a real-world coding problem you're currently facing. Compare their speed, accuracy, and the "feel" of their suggestions.
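A small harness makes such a comparison repeatable. In the sketch below, the "models" are plain Python functions standing in for real API calls (an assumption so the example runs offline), and `check_square` is a hypothetical correctness test for one toy problem. The `exec` call is acceptable only for trusted, local experiments.

```python
import time
from typing import Callable


def evaluate(generate: Callable[[str], str], prompt: str,
             check: Callable[[str], bool]) -> dict:
    """Time one generation call and test the returned code."""
    start = time.perf_counter()
    answer = generate(prompt)
    elapsed = time.perf_counter() - start
    return {"seconds": elapsed, "passed": check(answer)}


# Stand-in "models": replace these with calls to the services you are testing.
def model_a(prompt: str) -> str:
    return "def square(x):\n    return x * x\n"


def model_b(prompt: str) -> str:
    return "def square(x):\n    return x + x\n"  # deliberately wrong


def check_square(code: str) -> bool:
    """Execute the candidate code and test it on one known input."""
    namespace: dict = {}
    exec(code, namespace)  # only for trusted, local experiments
    return namespace["square"](3) == 9


results = {
    name: evaluate(fn, "Write square(x).", check_square)
    for name, fn in {"model_a": model_a, "model_b": model_b}.items()
}
for name, result in results.items():
    print(name, result["passed"], f"{result['seconds']:.6f}s")
```

Running the same prompt and check against each contender gives you comparable speed and accuracy figures instead of impressions.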

Integrating these powerful tools effectively is a critical step, but it's part of a bigger picture. For a broader look at enhancing your team's productivity and satisfaction, consider exploring other strategies for improving developer experience. As these LLMs become more ingrained in our workflows, the line between coding and creating will continue to blur, paving the way for a future where your vision is the only true bottleneck.

Ready to move beyond code assistance and start generating full-stack AI and Web3 applications from a single prompt? Explore Dreamspace, the AI app generator that turns your ideas into reality. Visit Dreamspace to see how our vibe coding studio can revolutionize your development process.