AI Models Comparison for On-Chain Apps

When you're comparing AI models for on-chain development, the whole game boils down to one thing: architecture versus use case.

You've got open-source powerhouses like Meta's Llama that give you total control to build something custom for niche blockchain jobs. On the other side, you have API-first models like OpenAI's GPT and Anthropic's Claude, which offer incredible general reasoning and are way easier to plug in. Your choice really hangs on that balance—how much control do you need versus how fast you want to move?

Choosing the Right AI for On-Chain Development

Picking an AI model is one of the most foundational decisions you'll make when building a dApp. Unlike a standard web app, the blockchain has its own set of brutal constraints, and they directly impact which model makes sense. An AI's performance can make or break the security, efficiency, and cost of your smart contracts.

The on-chain world is all about precision. Smart contracts, for example, absolutely require deterministic outputs: the same input has to produce the exact same output, every single time. That's not a natural behavior for most LLMs, which typically sample their output and can answer the same prompt differently from one run to the next. This guide gives you a developer-first framework for weighing the top options.

The AI market is just exploding right now, which makes this decision even more critical. Globally, the industry is projected to hit around $391 billion in 2025 and is on track to grow fivefold over the next five years. This isn't just hype; 83% of companies are calling AI a top business priority. You can dig into more of this data on the growth of AI and its impact over at explodingtopics.co

Evaluating the Top Contenders: GPT, Claude, and Llama

To make any real AI model comparison, you have to get past the marketing and look at the core philosophy behind each one. How they were built and what they were built for directly impacts their performance on the chain. We're going to dive into the three big players: OpenAI's GPT series, Anthropic's Claude 3 family, and Meta's open-source Llama 3.

Each model comes with its own distinct flavor. Their architecture, the data they were fed, and their intended purpose create clear trade-offs. You're constantly balancing versatility against safety and customizability. Getting a feel for these differences is the first real step in picking the right tool for your blockchain project.

OpenAI's GPT-4: The Generalist Powerhouse

For a long time, OpenAI's GPT-4 has been the one to beat for general-purpose AI. Its biggest win is its incredible versatility and a mature API ecosystem that just works. For developers, this means you can throw almost anything at it—from spinning up boilerplate smart contract code to debugging tricky transaction logic in plain English.

The real advantage of GPT-4 for on-chain development is how reliable and easy it is to plug into your workflow. It's a fantastic starting point for teams that need a powerful, ready-to-go solution without the headache of managing the model yourself. This makes it perfect for quickly prototyping and building dApps where you need solid, multi-step reasoning more than niche, specialized knowledge.

The true power of the GPT series is its accessibility. The robust API and extensive documentation mean developers can get from concept to a working prototype faster than with almost any other model, making it a go-to for many initial builds.

Anthropic's Claude 3: The Security-Focused Analyst

Anthropic built its Claude 3 family—Haiku, Sonnet, and Opus—from the ground up with a laser focus on AI safety and constitutional principles. This isn't just marketing fluff; it results in models that are genuinely less likely to generate harmful or buggy outputs. That’s a massive plus when you're working with immutable smart contracts where one mistake can be catastrophic.

But Claude's killer feature for on-chain work is its absolutely massive context window. Being able to process hundreds of thousands of tokens at once makes it an auditor's dream. A developer can literally dump an entire smart contract repository into Claude to hunt for vulnerabilities, trace dependencies, and check for code consistency—a job that's flat-out impossible for models with smaller context limits. To really get the most out of models like these, you have to understand prompt engineering.

Meta's Llama 3: The Open-Source Customizer

Meta's Llama 3 is playing a completely different game. Since it's open-source, it gives you a level of control and transparency you just can't get from a closed API. You’re not just a consumer; you can actually get under the hood to inspect, modify, and fine-tune the model to fit your specific needs.

This is a game-changer for decentralized projects. You can train Llama 3 on your own proprietary on-chain data to forge a highly specialized tool for things like fraud detection or spotting market trends. Plus, the community is buzzing, constantly pumping out new techniques and optimizations. For any team that puts a premium on data sovereignty and bespoke performance, Llama 3 is often the obvious choice. This is the kind of power that platforms like Dreamspace, an AI app generator, can help simplify, closing the gap between raw open-source potential and getting your app built fast.

Comparing Performance and Technical Architecture

When you’re trying to pick an AI model for on-chain development, you have to look past the marketing hype. The real story is in the performance benchmarks and the core architecture. These are the factors that determine whether a model can handle the precise, high-stakes work of building on the blockchain.

We’re going to dig into the tangible metrics: reasoning, logic, and especially code generation. Benchmarks like HumanEval for coding and MATH for mathematical problem-solving give us a standardized way to see what these models are actually capable of. A strong score here is a useful signal, though not a guarantee, of how well a model can write secure and efficient smart contracts.

Core Architectural Differences

The biggest fork in the road is choosing between closed-source API models and their open-source cousins. Honestly, this is probably the most critical decision you'll make for any on-chain project.

- Closed-Source (GPT & Claude): These are plug-and-play. You get an API key, and OpenAI or Anthropic handles all the messy infrastructure, updates, and maintenance. The main draw here is reliability and ease of use. You get top-tier performance without needing an in-house ML team to keep the lights on.

- Open-Source (Llama): Meta’s Llama series hands you the keys to the kingdom by giving you direct access to the model weights. This means you get total flexibility and control. You can fine-tune the model on your own proprietary data—like your project’s unique transaction patterns—to build something highly specialized. The catch? You’re on the hook for hosting and maintaining it all yourself.

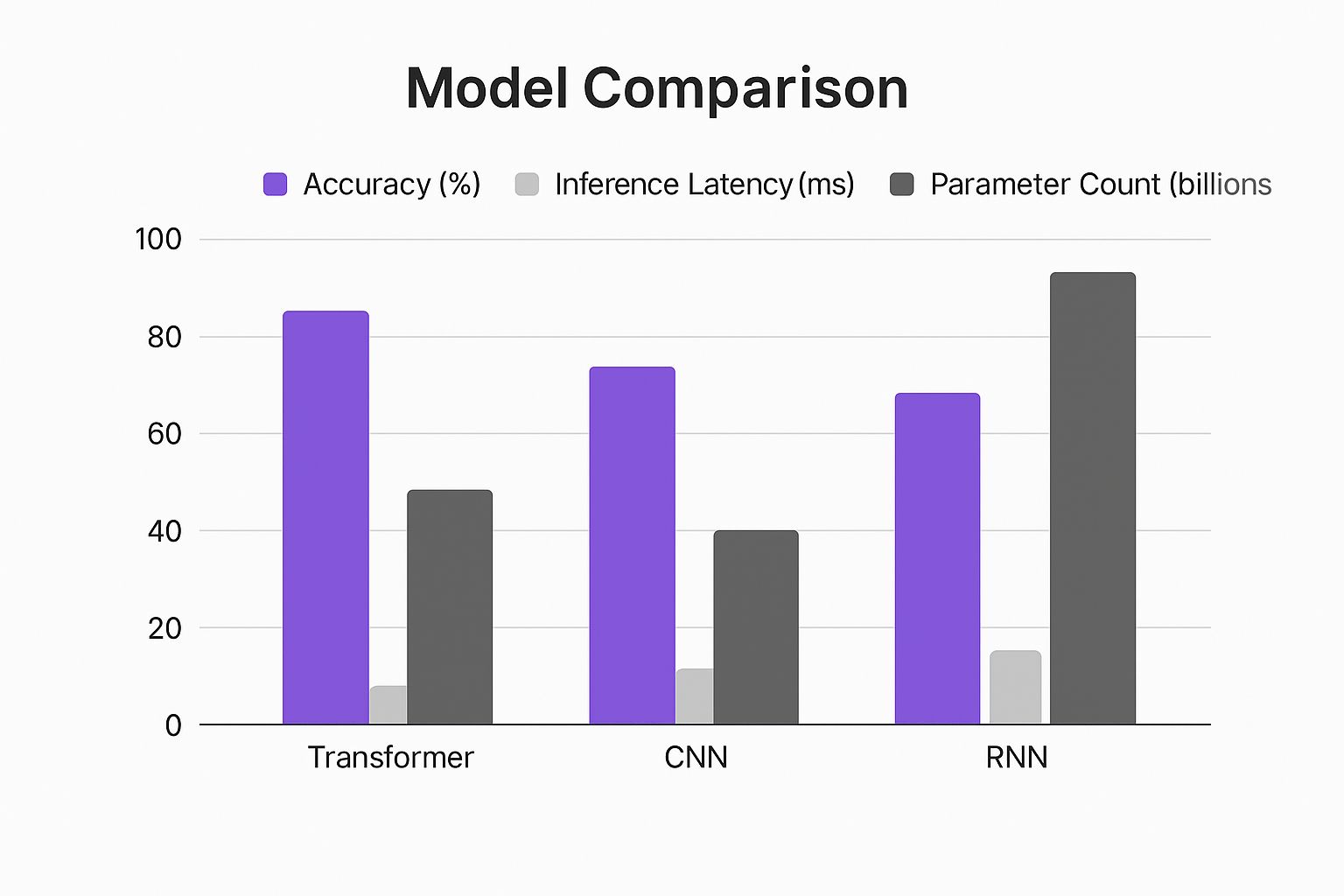

This infographic gives a great visual of the performance trade-offs you're making with different foundational AI architectures, like the Transformers that power models such as GPT and Claude.

As you can see, Transformer models are king when it comes to accuracy, but that performance comes at the cost of higher latency and more parameters. It's a classic balancing act.

Benchmarking On-Chain Capabilities

To make an objective call, we have to lean on benchmarks. For our purposes, we’re zeroing in on two key areas: logical reasoning and code generation.

Anthropic's Claude 3 Opus often shines in tasks that demand extreme precision. It was designed from the ground up with a focus on safety and cutting down on hallucinations, which really shows. On the other hand, OpenAI's GPT-4 is still a beast at general reasoning and creative problem-solving, making it a go-to for spinning up novel smart contract logic from scratch. For a closer look at this, you should check out our guide on the finer points of AI for code generation.

The global AI race is also a huge win for developers because it’s pushing everyone to get better, faster. Back in 2023, the top U.S. models were beating their Chinese counterparts by 31.6 percentage points on the HumanEval coding benchmark. Fast forward to the end of 2024, and that lead has been slashed to just 3.7 points. The field is advancing at an incredible pace, meaning more high-quality options are hitting the market all the time. You can read the full breakdown of these AI model performance trends in the AI Index Report.

The Critical Role of Context Windows

A model's context window—how much information it can juggle at once—is a huge deal for on-chain work. It’s not just a nice-to-have feature; a massive context window opens up completely new possibilities.

Claude 3’s context window is a genuine game-changer for security audits. The family launched with a 200,000-token window, and Anthropic has said the models can accept inputs of more than one million tokens for select customers. A developer can feed it an entire dApp’s codebase, provided it fits within that limit, and ask it to find cross-contract vulnerabilities or check for consistency. For models with smaller windows, you’d have to chop that code into smaller, disconnected pieces, losing important context.

For any project where security and absolute thoroughness are the top priority, a massive context window is non-negotiable. It elevates the AI from a simple coding assistant to a true analytical partner that can grasp the entire scope of a complex system.
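Before leaning on a big context window, it helps to check whether your codebase actually fits. Here is a minimal sketch of that check. The four-characters-per-token ratio is a rough assumption, not a real tokenizer, and `plan_audit_batches` and its `reserve` parameter are hypothetical names made up for this example.

```python
import math

# Assumed heuristic: roughly 4 characters per token. Use the provider's
# own tokenizer when you need an exact count.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate a token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def plan_audit_batches(files: dict[str, str], context_limit: int,
                       reserve: int = 4_000) -> list[list[str]]:
    """Greedily pack whole files into batches that fit the usable budget.

    `reserve` holds back room for the instructions and the model's reply.
    A file that exceeds the budget on its own still gets a batch to
    itself, so the caller can decide how to split it further.
    """
    budget = context_limit - reserve
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for path, source in files.items():
        cost = estimate_tokens(source)
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        current.append(path)
        used += cost
    if current:
        batches.append(current)
    return batches
```

If the plan comes back as a single batch, the whole repository can go into one prompt. More than one batch means the model loses cross-file context, which is exactly the trade-off described above.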

Understanding these architectural and performance details is key to matching the right model to your project's needs. And if you want to skip the headache of integrating these models directly, an AI app generator like Dreamspace is a great option. As a vibe coding studio, it gives you a much simpler path to building on-chain apps, letting you focus on your idea instead of the AI plumbing underneath.

Practical On-Chain Use Cases and Scenarios

Benchmarks and architecture deep dives are great, but the real test for any AI model is how it performs in the wild. This is where an AI models comparison shifts from abstract data points to tangible outcomes for on-chain development. The best choice between models like Claude, GPT-4, and Llama often snaps into focus when you look at specific, real-world jobs.

Each model brings something different to the table, making it a natural fit for certain blockchain tasks. A model's ability to digest huge amounts of text isn't just a neat feature—it’s the key to unlocking comprehensive security audits that were once out of reach. Likewise, the freedom to fine-tune a model can transform a generalist tool into a highly specialized fraud detection engine.

Claude 3 Opus for Comprehensive Smart Contract Audits

Picture a major DeFi protocol on the verge of a massive upgrade. Their codebase is a complex web of interconnected smart contracts, securing millions in user funds. A single, overlooked vulnerability would be catastrophic.

- The Problem: The team needs a bulletproof security audit to sniff out potential exploits, reentrancy attacks, or logic flaws across the entire repository. Manual audits are slow and can easily miss subtle bugs that only pop up when contracts interact.

- The Solution: They turn to Claude 3 Opus. Its standout feature, a 200,000-token context window (with inputs beyond one million tokens offered to select customers), lets them feed the entire codebase into a single prompt as long as it fits within that limit.

- The Rationale: Models with smaller context windows force you to break the code into disconnected pieces, losing the big picture. Claude, however, can see the whole system at once. It can trace function calls across contracts, understand shared state, and spot vulnerabilities that are invisible in isolation. Its strong safety alignment also means it’s less likely to suggest insecure code fixes.

This is a perfect example of how a specific architectural strength—the context window—directly creates a critical on-chain security advantage.
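To make the "whole codebase in one prompt" idea concrete, here is a rough sketch of how a team might assemble that prompt. The `=== FILE: ... ===` marker format and the `build_audit_prompt` helper are assumptions for illustration, not a format any provider requires.

```python
def build_audit_prompt(files: dict[str, str], instructions: str) -> str:
    """Join every source file into one prompt, with a path marker per file.

    Files are emitted in sorted path order so the same repository always
    produces the same prompt text.
    """
    sections = [instructions.strip(), ""]
    for path in sorted(files):
        sections.append(f"=== FILE: {path} ===")  # hypothetical marker format
        sections.append(files[path].rstrip())
        sections.append("")
    return "\n".join(sections)


repo = {
    "contracts/Vault.sol": "contract Vault { /* ... */ }",
    "contracts/Token.sol": "contract Token { /* ... */ }",
}
prompt = build_audit_prompt(
    repo,
    "Audit these contracts for reentrancy and cross-contract logic flaws.",
)
```

Keeping the path markers in the prompt lets the model refer to specific files when it reports a finding, which makes its output much easier to verify by hand.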

GPT-4 for Complex Contract Generation from Natural Language

A startup is building a DAO with a sophisticated governance structure. They need to implement token-weighted voting, time-locked proposals, and treasury management, but they're working with a lean development team.

For developers aiming to accelerate their build process without sacrificing complexity, GPT-4’s advanced reasoning is a powerful ally. It excels at translating intricate business logic into functional, multi-step smart contract code.

The team uses GPT-4 to generate the foundational smart contracts directly from detailed English prompts. They can describe an entire workflow, like, "A proposal needs 51% of votes from a 20% quorum and can only be executed after a 72-hour review period." GPT-4 can then draft the corresponding Solidity code. Its knack for multi-step reasoning allows it to handle the tangled logic these systems require. You can learn more about structuring these requests to get better results when you code with AI.
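Whatever the model drafts, you still need something to check it against. One option is a tiny reference model of the rule, written by hand, that your contract tests can compare with. The sketch below is one reading of the example prompt: quorum is measured against total token supply, approval against votes cast, and abstentions are ignored. Those interpretations and all names here are assumptions.

```python
from dataclasses import dataclass

QUORUM_BPS = 2_000            # 20% of total supply must vote (basis points)
APPROVAL_BPS = 5_100          # 51% of votes cast must be in favor
REVIEW_SECONDS = 72 * 3600    # 72-hour review period


@dataclass(frozen=True)
class Proposal:
    votes_for: int
    votes_against: int
    total_supply: int
    voting_closed_at: int  # unix timestamp, in seconds


def can_execute(p: Proposal, now: int) -> bool:
    """Return True only if quorum, approval, and the review delay all hold."""
    votes_cast = p.votes_for + p.votes_against
    # Integer math only, mirroring how a Solidity contract avoids floats.
    quorum_met = votes_cast * 10_000 >= p.total_supply * QUORUM_BPS
    approved = votes_cast > 0 and p.votes_for * 10_000 >= votes_cast * APPROVAL_BPS
    review_done = now >= p.voting_closed_at + REVIEW_SECONDS
    return quorum_met and approved and review_done
```

Writing the rule down this way also exposes the ambiguities in the English prompt (51% of what, exactly?), and those are the details worth spelling out before asking any model for Solidity.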

Llama 3 for Fine-Tuned DeFi Fraud Detection

A decentralized exchange (DEX) is getting hammered by sophisticated wash trading and front-running bots. Their generic detection tools just can't keep up with the attackers' evolving tactics.

Adapting AI models to specific data is crucial for practical on-chain apps. For instance, understanding how to approach training ChatGPT on custom data can turn a general tool into a specialist one. This is exactly where open-source models win. The DEX team picks Llama 3 because of its open architecture. They pull together a massive, proprietary dataset of on-chain transactions and start labeling suspicious patterns.

By fine-tuning Llama 3 on their own data, they build a custom fraud detection system that understands the unique trading patterns on their platform. This bespoke model blows off-the-shelf solutions out of the water, identifying malicious activity with over 95% accuracy in real time.
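The labeling step is where most of the work hides. As a rough illustration, here is one way a team might bootstrap labels with a simple heuristic before any fine-tuning happens. The `Trade` fields, the 60-second window, and the "round trip" rule are all assumptions for this sketch. A real wash-trading detector would need far richer signals, and the JSONL layout is just one common shape for training data.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Trade:
    tx_hash: str
    buyer: str
    seller: str
    pair: str
    timestamp: int  # unix seconds


def label_round_trips(trades: list[Trade], window: int = 60) -> dict[str, str]:
    """Mark trades 'suspicious' when two addresses swap roles on the same
    pair within `window` seconds; everything else is 'normal'."""
    labels = {t.tx_hash: "normal" for t in trades}
    ordered = sorted(trades, key=lambda t: t.timestamp)
    for i, first in enumerate(ordered):
        for second in ordered[i + 1:]:
            if second.timestamp - first.timestamp > window:
                break  # later trades are even further away in time
            swapped = first.buyer == second.seller and first.seller == second.buyer
            if swapped and first.pair == second.pair:
                labels[first.tx_hash] = "suspicious"
                labels[second.tx_hash] = "suspicious"
    return labels


def to_jsonl(trades: list[Trade], labels: dict[str, str]) -> str:
    """Serialize one labeled example per line for a fine-tuning job."""
    rows = []
    for t in trades:
        text = f"pair={t.pair} buyer={t.buyer} seller={t.seller} ts={t.timestamp}"
        rows.append(json.dumps({"text": text, "label": labels[t.tx_hash]}))
    return "\n".join(rows)
```

Heuristic labels like these are noisy, so treat them as a starting point for human review rather than ground truth.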

The latest 2025 benchmarks sharpen these distinctions even further. Claude 4’s reported 72.7% score on SWE-bench Verified, a software engineering benchmark, puts it among the top performers for coding tasks. Meanwhile, models like DeepSeek R1 are making noise by delivering comparable reasoning power at a fraction of the cost, making them a smart choice for budget-conscious projects. The takeaway is clear: the "best" model is simply the one that fits the job at hand, a principle that platforms like Dreamspace, a leading vibe coding studio, help developers embrace.

Analyzing Cost and Blockchain Integration Hurdles

Beyond raw performance, a couple of very real-world hurdles can make or break an on-chain AI project: budget and the sheer difficulty of implementation. An amazing idea doesn't mean much if it’s too expensive to run or too clunky to connect to a blockchain. This is where you have to get pragmatic with a cost-benefit analysis in any serious AI model comparison.

The way you pay for top-tier AI couldn't be more different across the board. API services like OpenAI's GPT-4 and Anthropic's Claude run on a simple pay-as-you-go model. You pay per token, which keeps upfront costs low and makes expenses predictable—perfect for startups that need to scale their spending as they grow. You only pay for what you use, and they handle all the messy infrastructure on the back end.

On the other hand, an open-source model like Meta's Llama 3 flips the script. The model itself is free to download, but the total cost of ownership is anything but zero. You’re on the hook for the heavy infrastructure costs—high-end GPUs, storage, and all the maintenance that comes with it. This path demands a serious upfront investment and ongoing operational cash, but it can be cheaper if you’re operating at a massive scale.

The True Cost of Open Source

It's easy to get lured in by Llama’s "free" sticker price, but the real costs are lurking in the operational details.

- Infrastructure: These models don't run on just any machine. They need powerful, expensive GPU servers that you either have to buy outright or rent.

- Maintenance: You'll need an expert—or a whole team—to manage updates, patch security holes, and just keep the lights on.

- Scalability: As your dApp gets more popular, you have to provision and manage an increasingly complex infrastructure to keep up with demand.

This whole trade-off puts a huge premium on having the right people in-house. If you don't have a dedicated machine learning operations (MLOps) team, the simplicity and reliability of a managed API will almost always win out over the potential long-term savings of hosting it yourself.

The decision is a classic "build vs. buy" dilemma. An API buys you speed and simplicity. Self-hosting buys you control and potential cost savings at scale—but only if you can handle the technical lift.
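You can put a rough number on that "at scale" threshold. The sketch below treats self-hosting as a flat monthly bill and the API as a pure per-token charge, which is a deliberate simplification. The dollar figures in the usage lines are made-up placeholders, not real quotes from any provider.

```python
import math


def monthly_api_cost(tokens: int, usd_per_million_tokens: float) -> float:
    """Pay-as-you-go cost for a month's token volume."""
    return tokens / 1_000_000 * usd_per_million_tokens


def break_even_tokens(fixed_monthly_usd: float, usd_per_million_tokens: float) -> int:
    """Monthly token volume at which a flat self-hosting bill matches the API bill.

    Simplification: self-hosting is modeled as a fixed cost (GPUs, storage,
    staff) with no per-token component.
    """
    return math.ceil(fixed_monthly_usd / usd_per_million_tokens * 1_000_000)


# Placeholder figures for illustration only.
threshold = break_even_tokens(fixed_monthly_usd=6_000, usd_per_million_tokens=10)
api_bill_at_threshold = monthly_api_cost(threshold, usd_per_million_tokens=10)
```

Below the threshold, the API is the cheaper option under these assumptions. Above it, self-hosting starts to pay off, but only if the fixed cost honestly includes the people needed to run it.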

Overcoming Blockchain Integration Challenges

Getting an AI model to talk to a blockchain is notoriously tricky. It introduces a unique set of headaches that go way beyond a simple API call and challenge the very nature of how blockchains work.

One of the biggest blockers is latency. Blockchains are intentionally slow, but users expect AI to give them answers instantly. Finding a way to manage that timing gap for a smooth user experience, without compromising on-chain security, is a major engineering challenge.

Then there’s the problem of deterministic outputs. Smart contracts demand that the same input always produces the exact same output. But LLM outputs are typically sampled, so the same prompt can yield different answers from run to run, and even low-temperature settings don't always guarantee identical results. This means you have to build an off-chain system that validates and normalizes the AI's output so that only consistent results ever touch a smart contract. To really get into the weeds on this, check out our guide on the fundamentals of blockchain application development.
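One common way to build that off-chain guardrail is to ask the model several times, normalize each answer, and only pass a result along when enough of the answers agree. Here is a minimal sketch of the idea. It assumes the model returns structured JSON, and the function names and agreement threshold are hypothetical.

```python
import hashlib
import json
from collections import Counter
from typing import Optional


def canonical_digest(payload: dict) -> str:
    """Hash a JSON payload in canonical form (sorted keys, no extra spaces),
    so key order and whitespace differences don't change the digest."""
    encoded = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def agreed_result(samples: list[dict], min_agreement: int) -> Optional[dict]:
    """Return the payload that at least `min_agreement` samples agree on,
    or None if no answer reaches that threshold."""
    if not samples:
        return None
    digests = [canonical_digest(s) for s in samples]
    winner, count = Counter(digests).most_common(1)[0]
    if count < min_agreement:
        return None
    return samples[digests.index(winner)]


# Three hypothetical model responses to the same prompt.
responses = [
    {"risk": "high", "score": 7},
    {"score": 7, "risk": "high"},   # same content, different key order
    {"risk": "low", "score": 2},
]
result = agreed_result(responses, min_agreement=2)
```

This doesn't make the model itself deterministic. It just makes sure that what reaches the contract is a value several independent runs agreed on, along with a digest you can log or verify later.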

You also need robust oracle solutions to securely pipe AI-generated data into an on-chain environment. Oracles act as a secure bridge, but they add yet another layer of complexity and a potential point of failure.

This is where a vibe coding studio like Dreamspace changes the game. As an AI app generator, it abstracts away these painful integration problems. Dreamspace gives you pre-built, optimized workflows that handle the headaches of latency, determinism, and secure data transfer. This frees you up to focus on your app's logic instead of wrestling with gnarly infrastructure.

Which AI Model Is Right for Your Project

So, which one do you choose? After digging through this AI models comparison, the real answer comes down to what your on-chain app actually needs. There's no magic bullet—just the right tool for the right job. Your priorities are everything here.

Each model brings something unique to the table. The trick is to be brutally honest about what matters most: raw power, ironclad security, or the freedom to build it your way.

Recommendations Based on Project Priorities

Let's cut to the chase. Here’s how I’d break it down based on what you're trying to build.

- For State-of-the-Art Performance: If you need a battle-tested, high-performance engine with incredible reasoning skills right out of the box, the GPT series is a no-brainer. Its robust APIs and massive community support mean you can get complex logic up and running fast.

- For High-Stakes Security and Analysis: When you’re dealing with smart contract audits or anything that absolutely cannot fail, Claude's architecture is built for the job. That massive context window is a game-changer for deep-diving into code and spotting vulnerabilities others might miss.

- For Maximum Customization and Privacy: Need total control? If you're fine-tuning on proprietary on-chain data and want to own your stack from top to bottom, Llama is your best bet. Its open-source spirit is perfect for specialized projects where you can't afford to be locked into someone else's ecosystem.

The smartest move is to stay agile. The model that gets your v1 out the door might not be the one that carries you to a million users. The real breakthroughs happen when you’re free to experiment.

This is exactly why avoiding vendor lock-in is so critical. Using an AI app generator that lets you switch between models as your project grows isn't just a convenience—it's a massive strategic advantage.
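If you're wiring models in yourself, the same idea applies in code: keep the rest of your app talking to a thin interface instead of a specific vendor SDK. The sketch below shows one way to do that. `ModelRouter` and the stub functions are hypothetical, and each stub stands in for a real provider call you'd write separately.

```python
from typing import Callable, Dict

# A "model" here is any callable that maps a prompt to a completion.
ModelFn = Callable[[str], str]


class ModelRouter:
    """Maps task names to registered models so swapping one is a one-line change."""

    def __init__(self) -> None:
        self._models: Dict[str, ModelFn] = {}
        self._routes: Dict[str, str] = {}

    def register(self, name: str, fn: ModelFn) -> None:
        self._models[name] = fn

    def route(self, task: str, model_name: str) -> None:
        if model_name not in self._models:
            raise KeyError(f"unknown model: {model_name}")
        self._routes[task] = model_name

    def run(self, task: str, prompt: str) -> str:
        return self._models[self._routes[task]](prompt)


# Stub adapters; in a real app each would wrap a provider's client library.
router = ModelRouter()
router.register("hosted-api", lambda prompt: f"[hosted] {prompt}")
router.register("self-hosted", lambda prompt: f"[local] {prompt}")
router.route("audit", "hosted-api")
router.route("fraud-detection", "self-hosted")
```

Moving the audit task to a different model later is then a single `router.route("audit", ...)` call, with no changes to the code that calls `run`.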

For anyone looking to test drive different models without getting bogged down in integration headaches, Dreamspace is the answer. It’s a vibe coding studio designed to help you find the perfect AI for your on-chain app and ship it—fast. Generate your next project at https://dreamspace.xyz.